WEEK 9

ARDUINO WORKSHOP

Presentation by Ashley Hi

Feelers Presentation

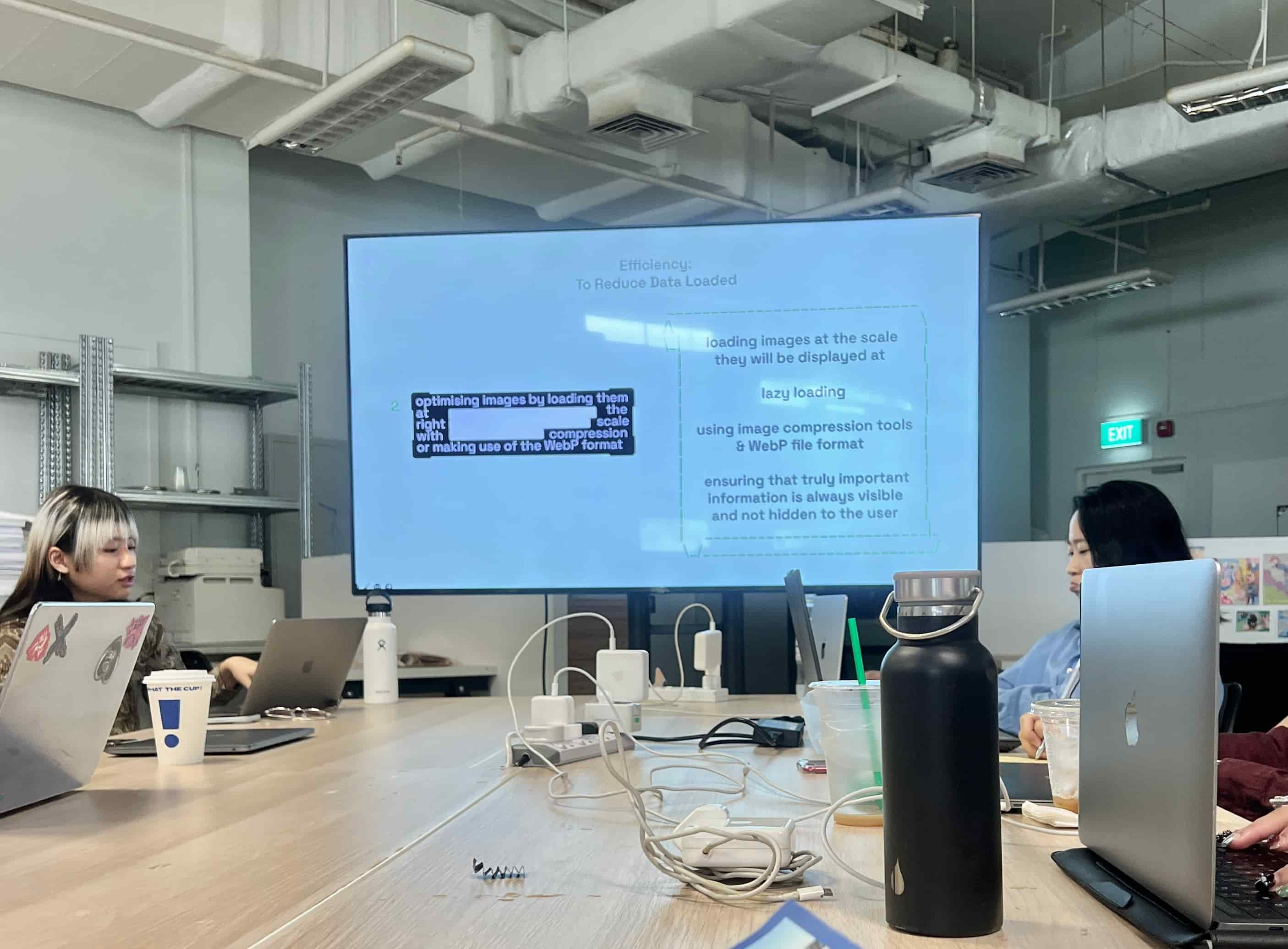

This week, we had a guest presentation from Ashley Hi, the director of Feelers, a research lab of artists and designers working at the intersection of art and technology. Ashley gave a great presentation on what they do and on their process of collaborating with different creatives. Their approach to sustainability when working with technology, as shown in their works, was also inspiring. She shared some small tips on how to use energy more efficiently, for example compressing your images and optimizing how much data a page has to load.

Another tip I didn't even know was that using a white screen takes more energy than using a dark screen. It hit home for me because I prefer to see everything in white, and I always turn dark mode off every time it switches on. So there is something meaningful here for me: a bad habit to change.

AI: What does it look like?

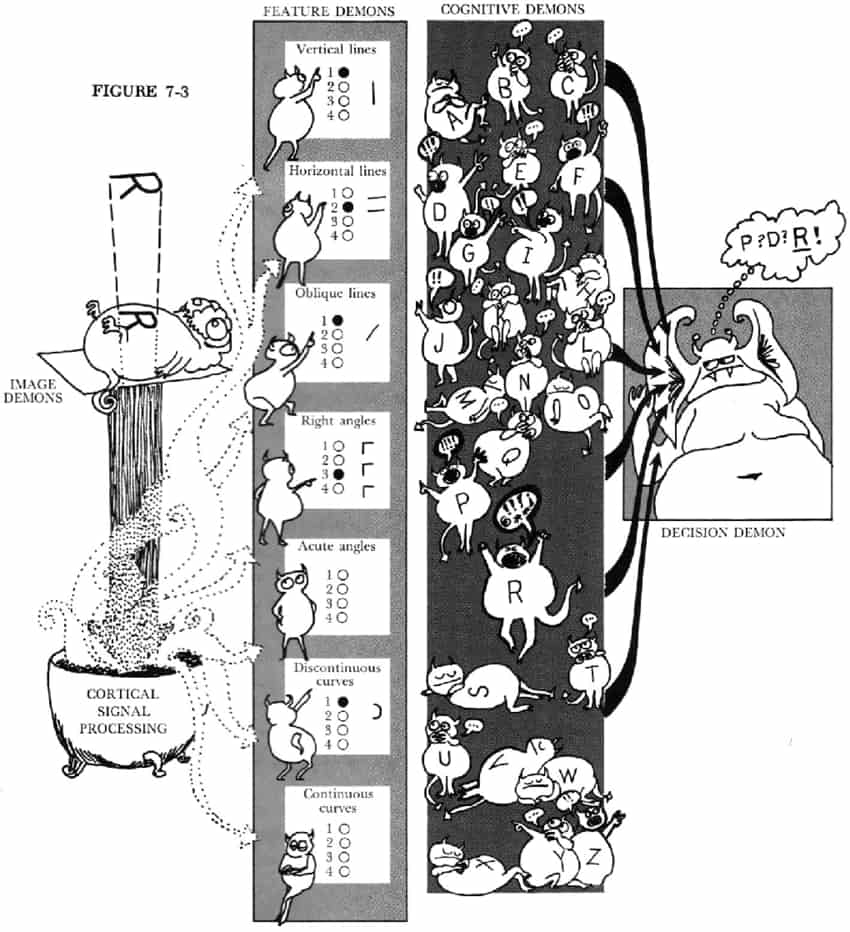

Ashley also introduced us to the concept of "pandemonium" created by Oliver Selfridge, which comes from a theory in cognitive science describing how the brain processes visual images. This theory was later developed and applied in artificial intelligence and pattern recognition; Selfridge describes this process as an exchange of signals within a hierarchical system of detection and association, the elements of which are metaphorically termed "demons".

I found the idea of pandemonium interesting because it serves as a bridge between biological and artificial systems. It leverages principles foundational to both how the human brain processes information and how AI systems are designed to recognize patterns.

If this metaphorical approach helps us understand how AI works, perhaps it could also be a way to conceptualize how human memory functions. Although I believe AI can never replicate the way we experience our memory, it's possible to imagine our brains working with similar metaphorical "creatures," each with a specific task, like turning light switches on and off. Perhaps this could be one way to show how even the most complex system can be told as a more engaging narrative. I also think it could be useful for differentiating between human and AI memory.

Build-A-Caifan: generated food plate using machine learning

Overall...

The projects she presented were quite diverse, and it's interesting to see that they come from a collaborative space of people with different backgrounds. She talked about how a project begins in a collaboration: whether one person initiates the idea or it comes about naturally. She explained that their way of working is to bring everyone's interests together, discussing what each person is interested in and eventually finding a middle ground that they can all work on.

Most of the works they have done are quite exploratory and fun, like the Build-A-Caifan project, where they trained a machine learning model on an image dataset of caifan plates so that you can create your own caifan through image generation.

Analog Rotation: patching Arduino

Dance with AI: using p5js to interact with Gemini model

Arduino Workshop

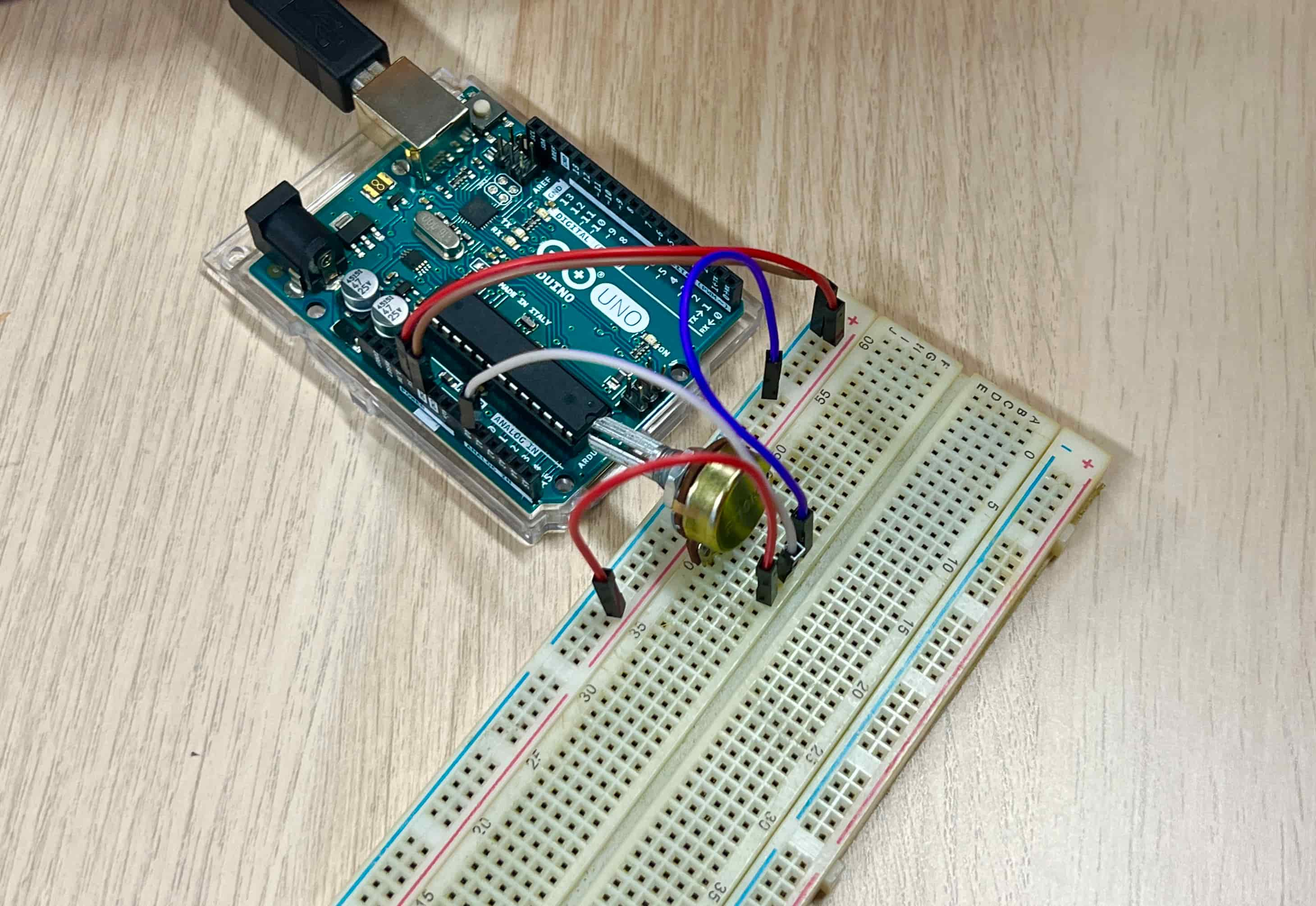

We also had a three-hour session with Andreas from the CID lab, who gave us some exercises working with Arduino (most people are interested in the Arduino). We had a small demonstration and a bit of practice patching up the Arduino. It was basically a short recap of what we did in the first year, along with some new tools that Andreas shared. One of them was a sketch he showed us in which you can integrate an AI model into your p5js sketch code. You can prompt the model and feed its responses into your visuals to create mini interactions with it.

After the Arduino workshop, I found the collected data quite interesting. It sent me back to the first task I did, which was quantifying something that cannot be quantified (memories). What if I could transform the data that results from our present experience into data that is visible to the machine? This became the starting point for another experiment.

Using an Accelerometer Sensor to convert motion data

Turning Analog Data to Pixel Data

I tried a mini experiment with the accelerometer that Andreas had, turning the analog data into pixel data using a p5js sketch, so that the data could later be used to create an image. The idea was to visualize experiences and moments, the small meaningful interactions that contribute to our memories. If a hug (an experience) could be visible to a machine, it would look like this (a generated image).

With Andreas' help, I managed to connect the Arduino to the p5js sketch code, where I mapped the collected motion values (x,y,z,...,...) to RGB values (x,y,r,g,b). I also borrowed Andreas' object with the sensor attached to help me test the sketch code. There were a few errors during the process. For example, the mapped values somehow came out as NaN (not a number), so not much pixel data was collected. In the end, most of the technical issues were solved thanks to Andreas.
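The mapping step can be sketched roughly as follows. This is a minimal illustration in Python, not the actual p5js sketch from the workshop. The function names, the 0 to 1023 input range (typical of a 10-bit analog reading), the 100 by 100 canvas, and the choice of which axis drives which channel are all assumptions made for the example. It also shows one way to skip NaN readings instead of letting them reach the pixel data.

```python
import math


def map_range(value, in_min, in_max, out_min, out_max):
    """Linearly rescale value from one range to another (similar in spirit to p5js map())."""
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


def clamp(value, low, high):
    """Keep value inside [low, high]."""
    return max(low, min(high, value))


def motion_to_pixel(ax, ay, az, width=100, height=100, in_min=0, in_max=1023):
    """Turn one accelerometer reading into an (x, y, r, g, b) record.

    Returns None when any reading is missing or NaN, so bad samples are
    skipped rather than stored. The axis-to-channel assignment below is a
    hypothetical choice for illustration only.
    """
    for reading in (ax, ay, az):
        if reading is None or math.isnan(reading):
            return None

    def scaled(reading, out_max):
        # Map to the output range, then clamp in case the sensor overshoots.
        return int(clamp(map_range(reading, in_min, in_max, 0, out_max), 0, out_max))

    x = scaled(ax, width - 1)    # assumed: x axis sets the pixel column
    y = scaled(ay, height - 1)   # assumed: y axis sets the pixel row
    r = scaled(ax, 255)          # assumed: x axis also drives red
    g = scaled(ay, 255)          # assumed: y axis also drives green
    b = scaled(az, 255)          # assumed: z axis drives blue
    return (x, y, r, g, b)
```

Checking for NaN before mapping means a single bad serial reading drops one sample instead of corrupting the rest of the collected data.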

RGB pixel values converted from motion data

Quantifying Movement

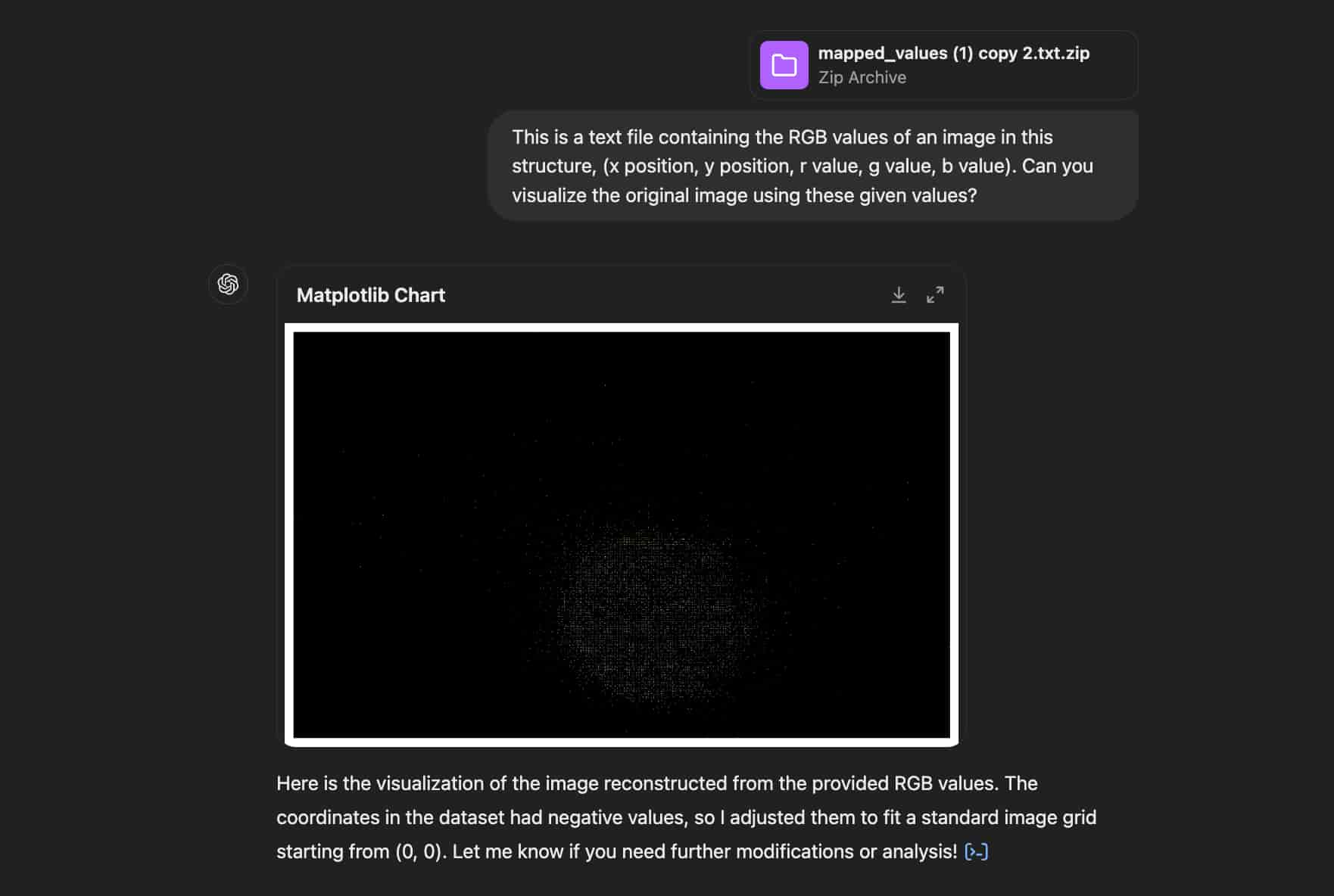

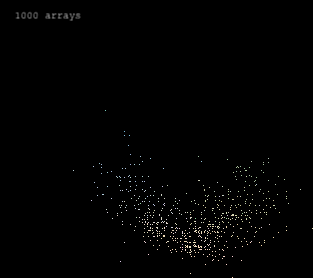

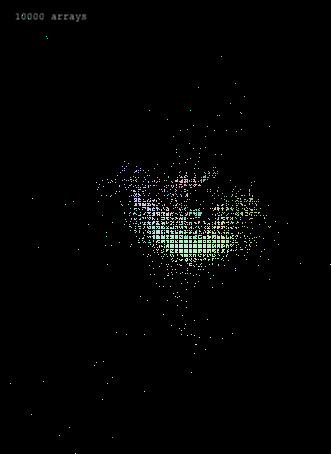

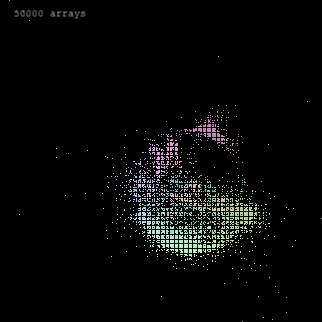

Then, I collected the mapped values and saved them in a text file, planning to use this data to construct an image with ChatGPT. As I converted the motion values, I could specify how many arrays of pixel values I wanted to collect, and I collected several sets of data ranging from 1,000 to 50,000 arrays. The number of arrays then determines the dimensions of the image that is generated.
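As a rough illustration of this step, the sketch below stores pixel records as plain text and derives an image size from the number of records. It is written in Python and is only an assumption about how this could be done: the one-record-per-line `x,y,r,g,b` format, the function names, and the square-image rule are all hypothetical, and the rule ChatGPT actually used to pick the dimensions is not known.

```python
import math


def records_to_text(records):
    """Serialize (x, y, r, g, b) records as one comma-separated line each."""
    return "\n".join(",".join(str(value) for value in record) for record in records)


def text_to_records(text):
    """Parse the text format back into a list of integer tuples, skipping blank lines."""
    records = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        records.append(tuple(int(part) for part in line.split(",")))
    return records


def square_side(record_count):
    """Smallest square side whose area can hold record_count pixels (assumed rule)."""
    if record_count <= 0:
        return 0
    side = math.isqrt(record_count)
    return side if side * side == record_count else side + 1
```

Under this assumed rule, 1,000 records would fit in a 32 by 32 image and 50,000 records in a 224 by 224 image, which is one way the number of arrays could lead to images of different dimensions.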

Generated Images from the RGB values

ChatGPT's Visualization

Since the x and y positions in the pixel values don't come in sequence as they would in a normal image, there are blank spaces, so the colors appear as scattered dots rather than a full image filled with pixel values. The images presented here show ChatGPT's visualization of the motion data. It constructed each image based on the RGB values I provided. Some images come in different dimensions; this may be caused by the different numbers of pixel-value arrays I collected.
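The dotted look can be reproduced with a small sketch. The Python below is an illustration under assumptions, not the process ChatGPT followed: the function names and the black background are hypothetical. It places each (x, y, r, g, b) record on a blank grid and reports how much of the grid was actually filled.

```python
def build_grid(records, width, height, background=(0, 0, 0)):
    """Place (x, y, r, g, b) records on a width x height grid.

    Positions never visited keep the background color, which is what makes
    the result look dotted. Returns the grid and the number of distinct
    positions that received a color.
    """
    grid = [[background for _ in range(width)] for _ in range(height)]
    filled = set()
    for x, y, r, g, b in records:
        if 0 <= x < width and 0 <= y < height:
            grid[y][x] = (r, g, b)   # later records overwrite earlier ones
            filled.add((x, y))
    return grid, len(filled)


def coverage(filled_count, width, height):
    """Fraction of the grid covered by motion data (0.0 to 1.0)."""
    return filled_count / (width * height)
```

Because the positions come from motion rather than from scanning row by row, many records can land on the same pixel while other pixels are never reached, so collecting more records does not necessarily mean a fuller image.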

Moving Forward...

I quite like the images I created with ChatGPT during the workshop, and I believe I could experiment with the sensor and sketch code a little more. For the next experiment, I thought I could start collecting more meaningful interactions, such as a hug, reading, writing, or maybe small interactions like flipping through a book. The main thing I need to think about is how I am going to capture these movements with the accelerometer sensor. Because it is connected to the circuit by solder, it is quite fragile, so I need to handle it with care.

Overall, I thought the workshops were enjoyable, and I think this experiment could be developed further by creating more visual experiments that translate small moments into images.