WEEK 13

DECISION SPACE

Transcendence (2014): Johnny Depp turning into AI

Revisiting Research Question

This week, I had a consultation with Andreas in which I clarified my research questions for my dissertation. It felt like there were too many thoughts in my head, and I wasn't sure what to prepare for the final deliverable (the prototype), so Andreas and I discussed how to simplify some of my thoughts.

What's my research point?

Although it's pretty clear that I want to differentiate between human and machine memory, the discussion with Andreas reminded me of the point I should focus on moving forward. My point? To put it simply, machine learning models can only mimic human abilities. Unlike humans, whose experiences are tied to emotional and subjective understanding, they are unable to understand what an experience is.

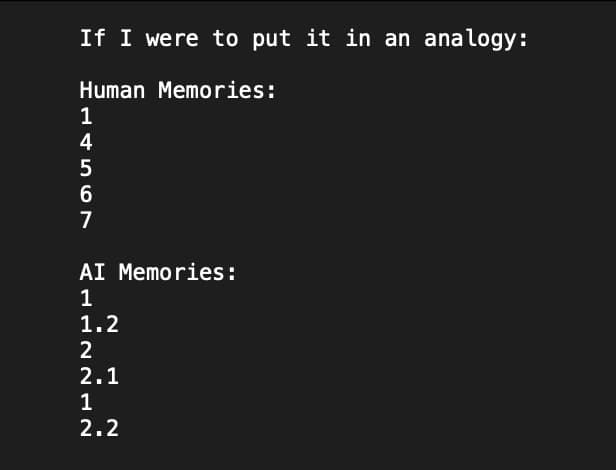

Human memories are more organic and unpredictable, whereas AI memory, although it can be generative, is sometimes repetitive and therefore more predictable, which I believe is what makes it look artificial. To put it as an analogy: if you ask someone the same question at different times, they will answer differently each time, making the output unpredictable (it can be 1, 4, 3, sometimes 6). An AI's answer can also differ each time, but its essence stays the same; in other words, it is very repetitive (it can be 1, sometimes 1.2, sometimes 2, but then 1 again).

When I discussed this opinion, Andreas suggested that I could look into sentient AI for my contextual research. It reminded me of the movie Transcendence, starring Johnny Depp, which speculates about a world where AI becomes sentient and can have its own consciousness. Although I have considered it, I still believe my point is valid, and I could find a stronger framing than making sentient AI the main context of the research.

Decision Space for The Photographers' Gallery

Decision Space

For my next meeting, I decided to create another experiment to support my opinion, guided by two questions: How do I reflect the boundaries between human and machine memory? How do I simplify my thoughts so that other people can understand what I am trying to say through my visual experiments?

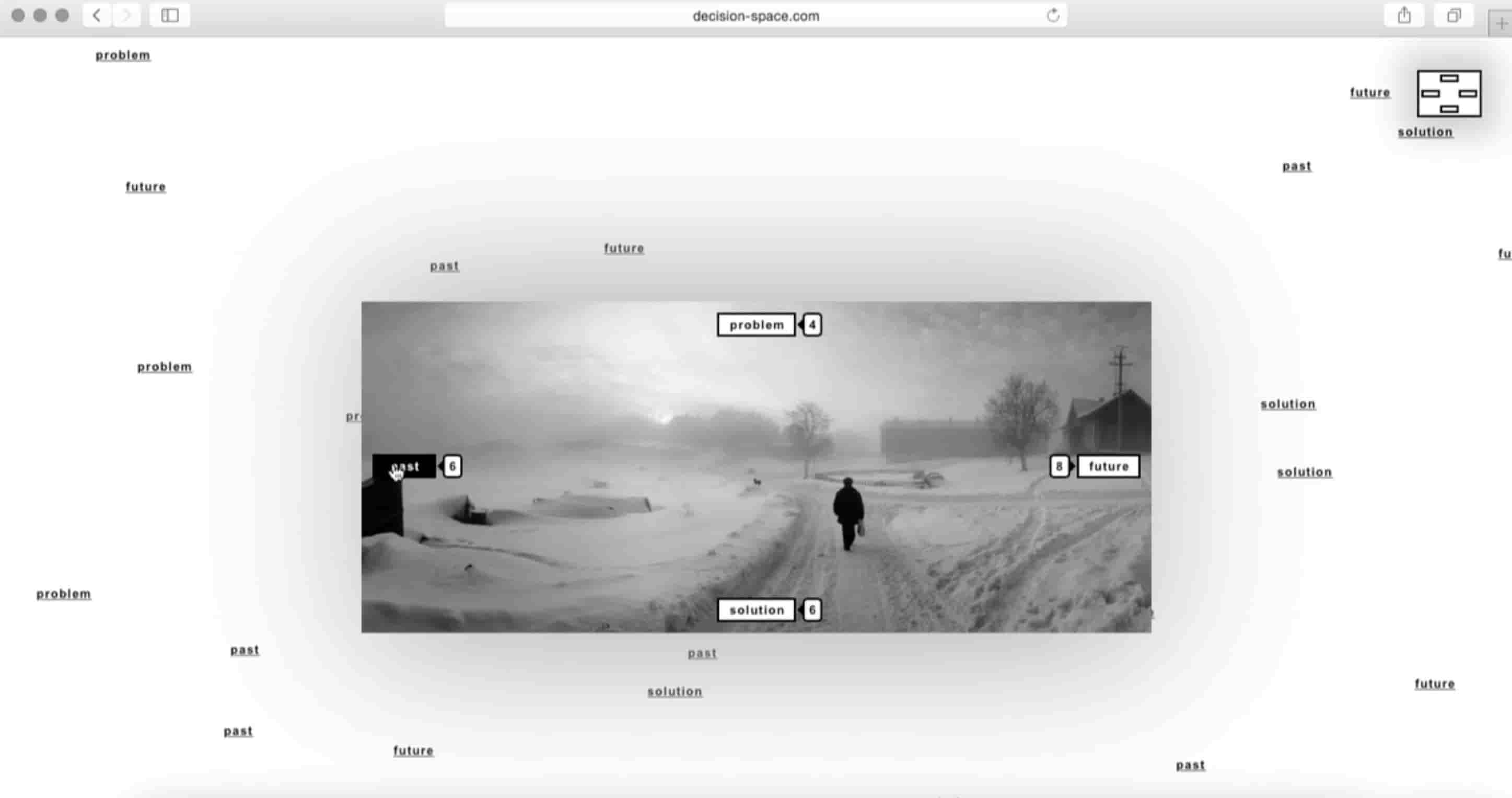

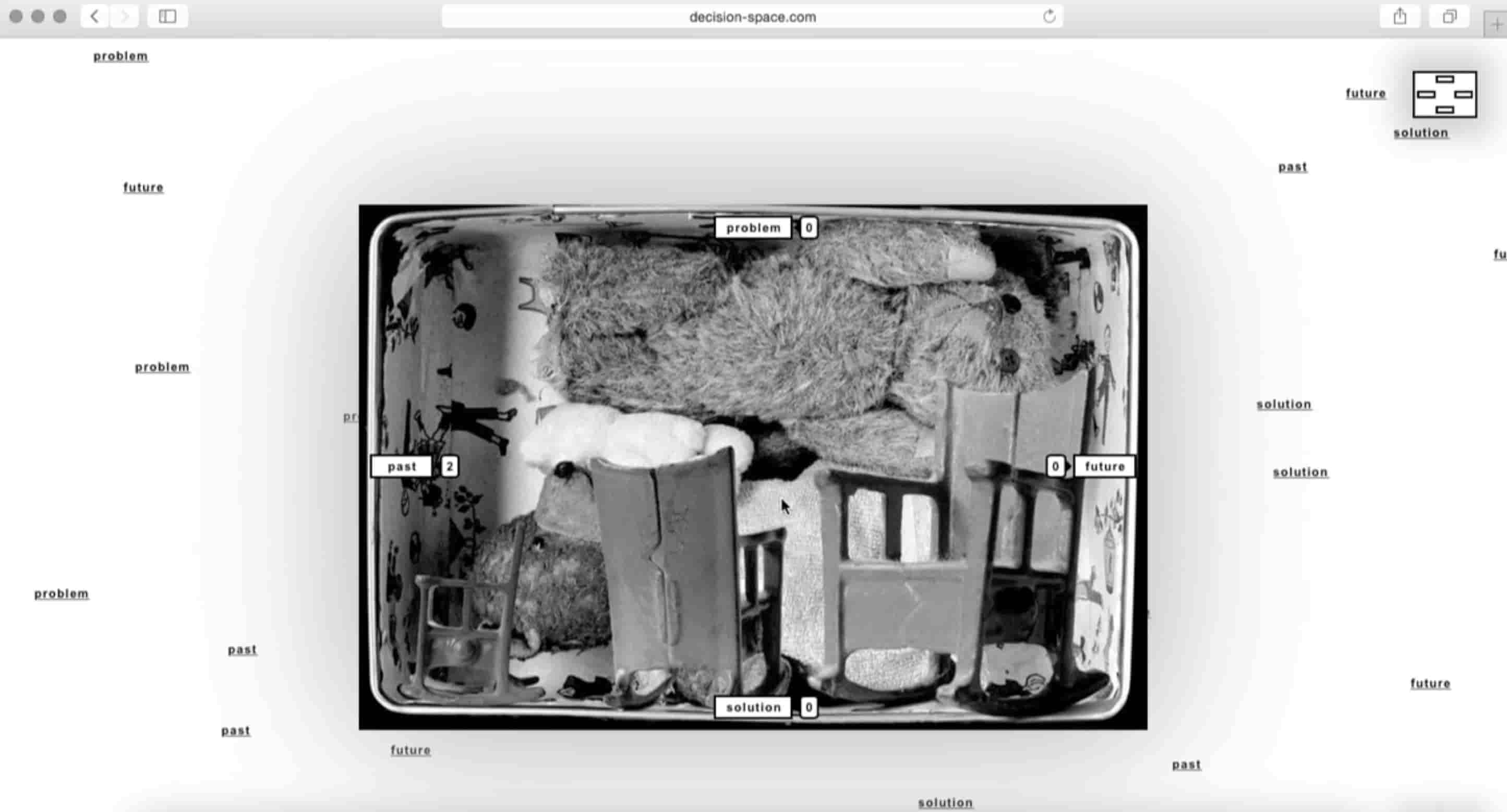

I came across a project called "Decision Space", which is part of The Photographers' Gallery website. The project raises questions around photography, big data, surveillance, the hidden manual labor behind artificial intelligence, and the biases embedded in algorithmic systems. Visitors were invited to assign all the images available on the gallery's website to one of four categories: Problem, Solution, Past and Future, without any context other than the images themselves.

What makes this project even more interesting is that the decision space where visitors classify the images is only one part of the installation. In the main part, the view is reversed: a trained neural network categorizes the people and their photos based on what it has learned, following the same concepts and biases it was taught from the image dataset.

Decision Space with a Gemini model integrated via API

Experiment

Decision Space with Gemini

From the previous reference, I liked the idea of not giving visitors any context and asking them to classify the images based on what they think the images belong to. Therefore, I decided to create my own decision space, where I envisioned people and the AI model classifying the images side by side.

For the images, I used popular movie scenes, which I believe evoke more diverse responses from humans than from AI. There's no right or wrong answer in this decision space, but I wanted to compare what humans and AI think about these images and how each of them classifies the images in the results.
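The side-by-side comparison was not built in this experiment, but one way to set it up is sketched here in Python, assuming each image collects a list of "happy"/"sad" votes from people and a single label from the model. The function name `compare_votes` and the example votes are hypothetical, not results from the experiment. For one image, it reports how the human votes split and what share of people agreed with the AI.

```python
from collections import Counter


def compare_votes(human_votes: list[str], ai_label: str) -> dict:
    """Summarize human votes for one image and how often they match the AI's label.

    Hypothetical helper: the human side of the decision space was never built.
    """
    if not human_votes:
        raise ValueError("need at least one human vote")
    counts = Counter(human_votes)
    total = len(human_votes)
    # most_common(1) returns [(label, count)]; ties keep first-seen order
    majority_label, _ = counts.most_common(1)[0]
    return {
        # Share of people choosing each label: a wider split means more diverse answers
        "human_split": {label: n / total for label, n in counts.items()},
        "human_majority": majority_label,
        # Fraction of people who picked the same label as the model
        "agreement_with_ai": counts[ai_label] / total,
    }


# Hypothetical votes for one movie still, not collected data
result = compare_votes(["sad", "sad", "happy", "sad"], ai_label="sad")
print(result)
```

Keeping the whole split, rather than only the majority answer, is what would make the diversity of human responses visible next to the model's single label.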

I selected simple, straightforward images from movies that I believed people might recognize. I integrated a Gemini model into my website using an API key, with the help of a sketch code template from Andreas. Initially, I planned to run the classification side by side (human versus machine). However, due to technical issues, I could only complete the decision making for the Gemini model.

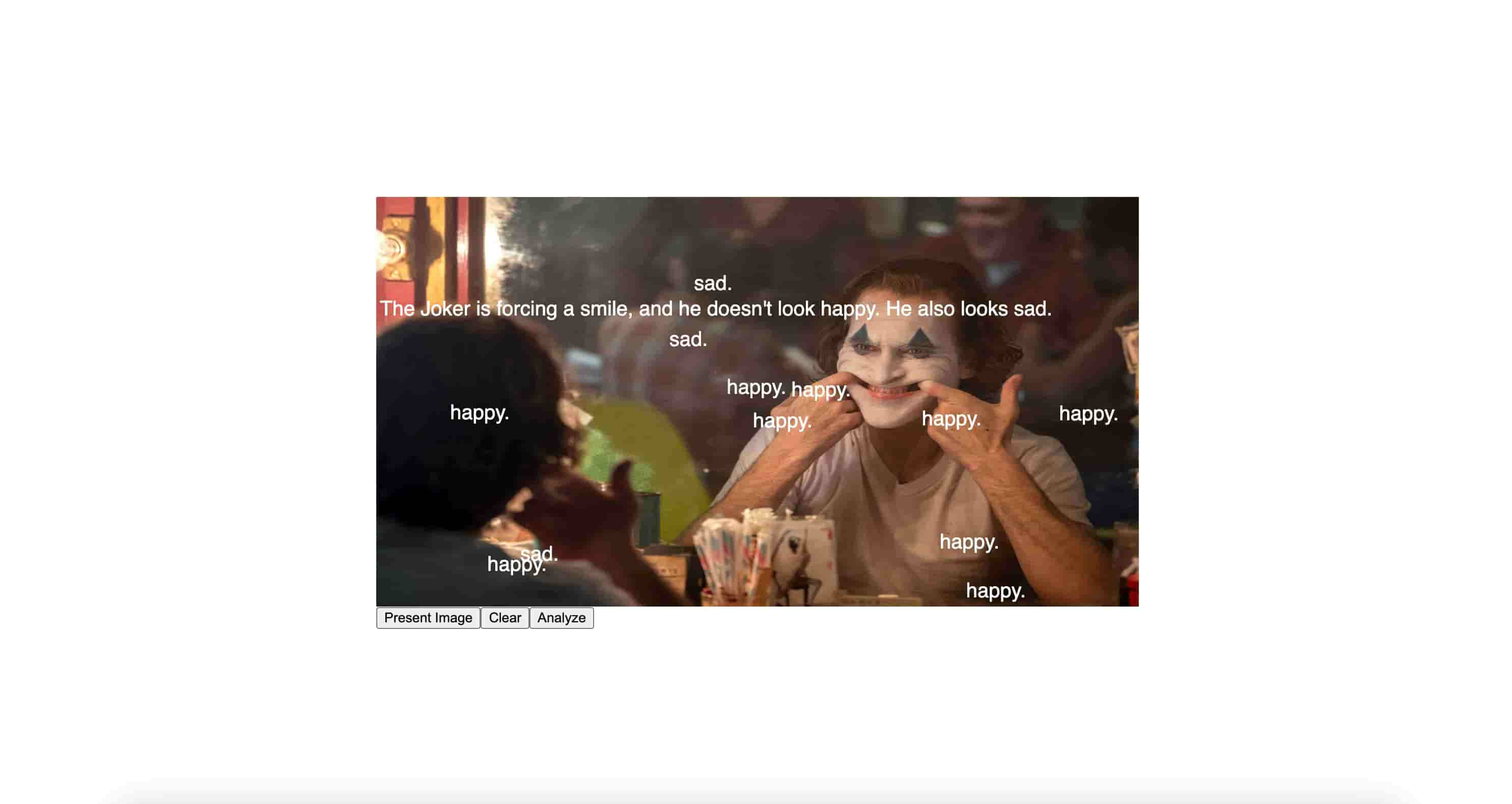

So I gave Gemini the prompt: "Categorize this image based on 'happy' or 'sad'." My initial assumption was that the machine would only detect what is physically in the image (how bright the colors are, which facial expressions it can detect, what objects are in the background), while people would categorize these images based on their contextual understanding or the emotional resonance they have with movies they have watched and recognized.
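The website itself used a sketch code template from Andreas, which is not reproduced here. As a rough illustration of what such an integration involves, here is a minimal Python sketch, assuming the public Gemini REST `generateContent` endpoint and a model name such as `gemini-1.5-flash` (both assumptions; substitute whatever model your API key can access). The helper names `build_request_body`, `extract_label` and `classify_image` are hypothetical. It sends the same happy/sad prompt together with one image and reduces the free-text answer to a single label.

```python
import base64
import json
import urllib.request

# Assumption: Gemini REST endpoint; the model name is a placeholder
API_URL = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    "{model}:generateContent?key={key}"
)
PROMPT = 'Categorize this image based on "happy" or "sad".'


def build_request_body(image_bytes: bytes, mime_type: str = "image/jpeg") -> dict:
    """Pack the text prompt and the base64-encoded image into one request."""
    return {
        "contents": [
            {
                "parts": [
                    {"text": PROMPT},
                    {
                        "inline_data": {
                            "mime_type": mime_type,
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        }
                    },
                ]
            }
        ]
    }


def extract_label(response: dict) -> str:
    """Reduce the model's free-text answer to "happy", "sad" or "unclear"."""
    text = response["candidates"][0]["content"]["parts"][0]["text"].lower()
    # Naive keyword check: an answer naming both words is left as "unclear"
    has_happy, has_sad = "happy" in text, "sad" in text
    if has_happy and not has_sad:
        return "happy"
    if has_sad and not has_happy:
        return "sad"
    return "unclear"


def classify_image(image_bytes: bytes, api_key: str, model: str = "gemini-1.5-flash") -> str:
    """Send one image to Gemini and return the reduced label (needs network access)."""
    body = json.dumps(build_request_body(image_bytes)).encode("utf-8")
    request = urllib.request.Request(
        API_URL.format(model=model, key=api_key),
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return extract_label(json.load(response))
```

Reducing the answer with a keyword check keeps the model's output comparable to a human's single choice, but a reply such as "looks happy but is actually sad" comes back as "unclear" instead of being guessed.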

However, the result was a bit different from what I assumed. As you can see in one of the images, the AI has quite a good understanding of who the Joker is. Even though his face looks like it's smiling, the model has a better grasp of what the character is feeling (probably because it was trained on datasets from the internet). This reminded me that the machine I am using is not as stupid as I thought, and that it probably has biases as well.

A failure I had when integrating the Gemini API into my website

Feedback & Reflections

It seems that the idea behind the decision space is a bit too far-fetched for other people to understand what I am talking about. I thought this website would be straightforward enough to reflect on what makes us so different from machines. However, it still came across as too abstract when I presented it to Andreas.

Although I achieved what I was aiming for technically, the result of this experiment didn't really satisfy me conceptually. Hence, this experiment should remain just an experiment.

Moving forward, I should focus on preparing my prototype, which is still my biggest problem to solve. During this difficult time, I should also reflect on each of the experiments I have done so far, which will help me compile all of my work together.

Andreas suggested that I create a narrative for my experiments, like a string that connects them together. A narrative can be written as a paragraph; however, people should be able to experience it not just by reading it but also through the experiments themselves.