WEEK 7

SEGMENTING IMAGES

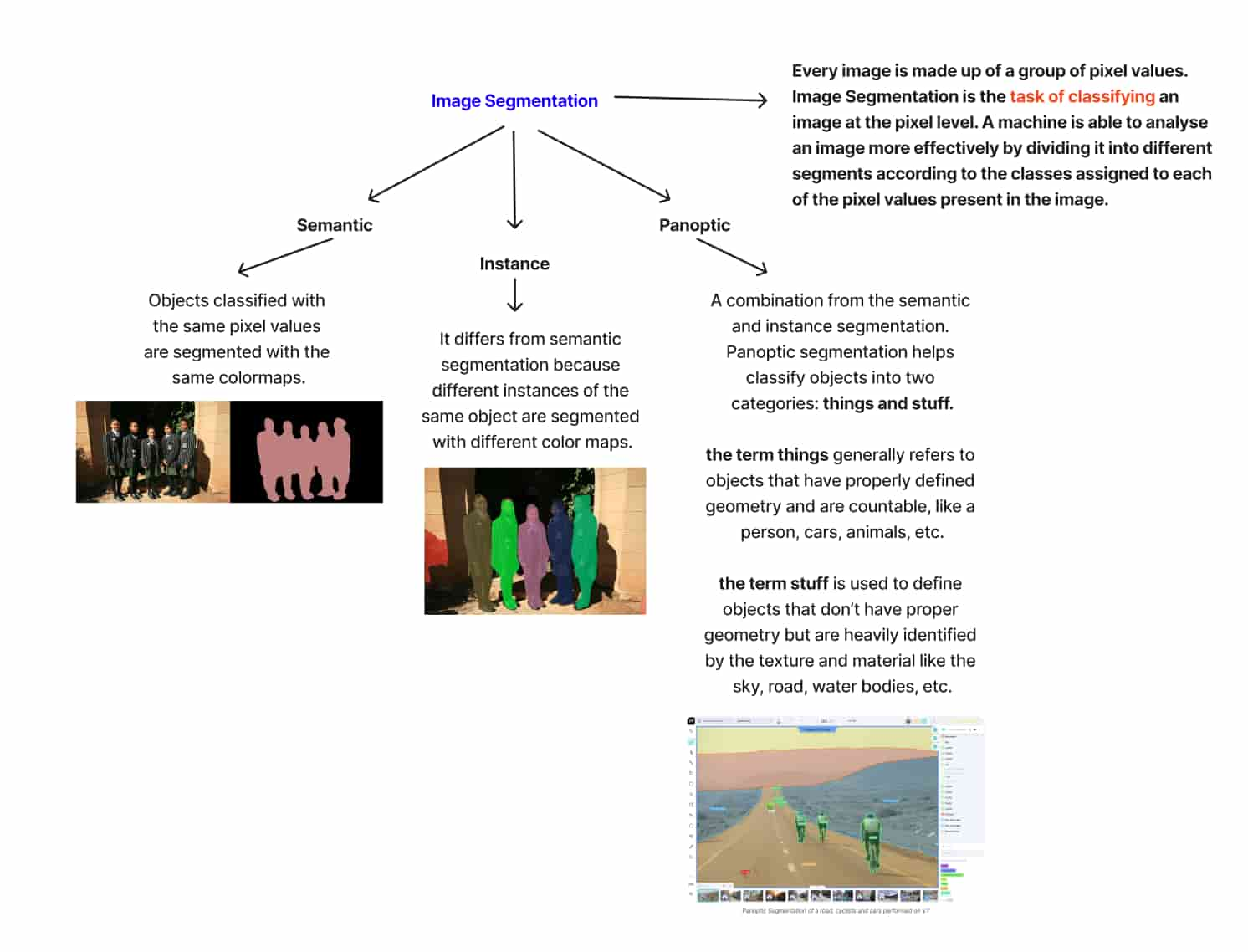

Types of Segmentation: Semantic, Instance, Panoptic

Understanding Image Segmentation

This week, our goal was to present all of our past experiments in a table setup. With some extra time beforehand, I did an additional experiment on image segmentation to gain a better understanding of the technique.

What is Image Segmentation?

In this week’s experiment, I explored image segmentation, a technique that divides an image into meaningful regions or segments, simplifying or changing its representation so that it is easier to analyze. It is commonly used in machine learning for tasks such as object recognition, image analysis, and background removal. There are three main types of segmentation, each with a specific role: semantic segmentation assigns every pixel a class label (for example, "person" or "sky"), instance segmentation separates individual objects of the same class, and panoptic segmentation combines the two.

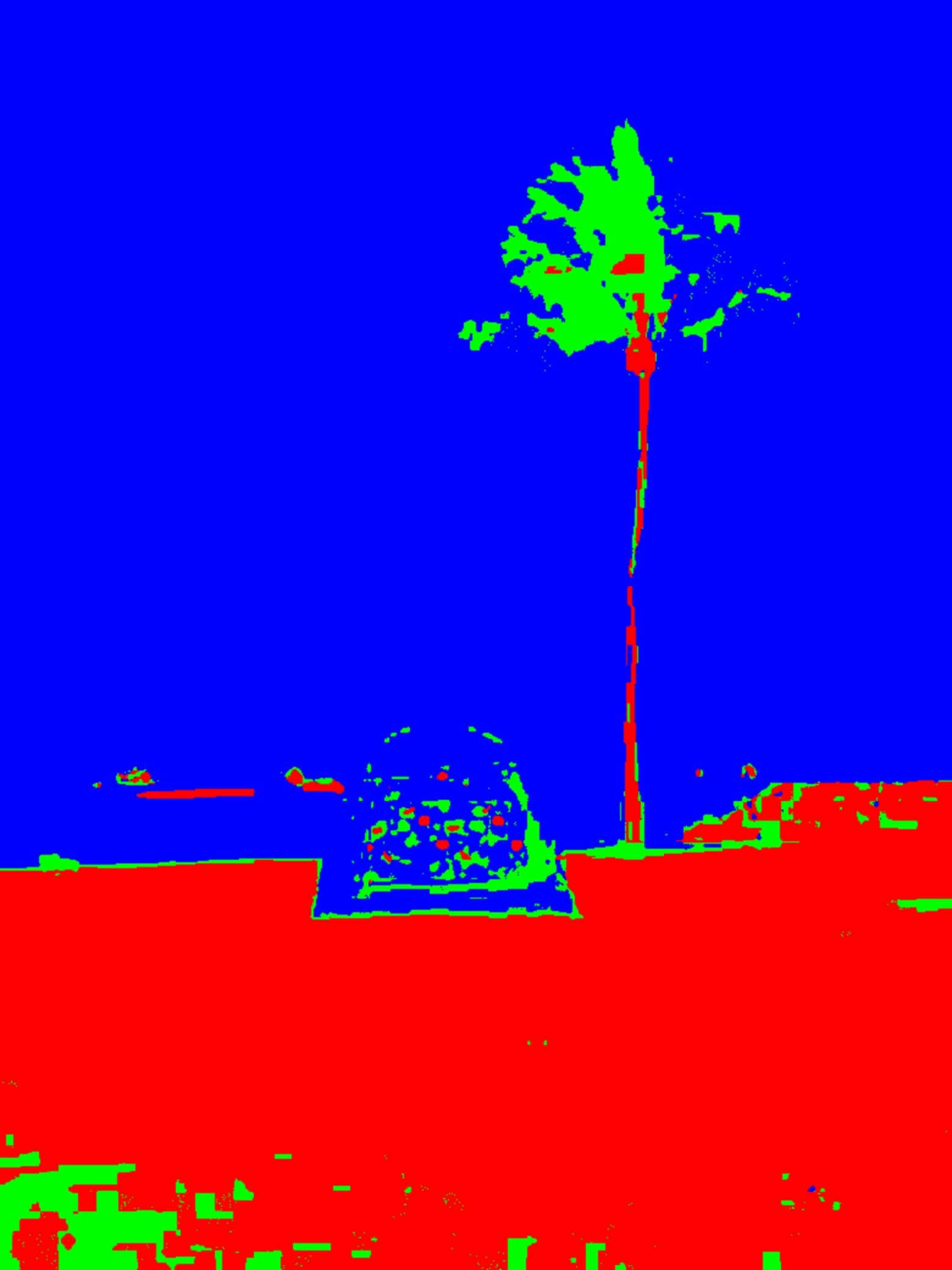

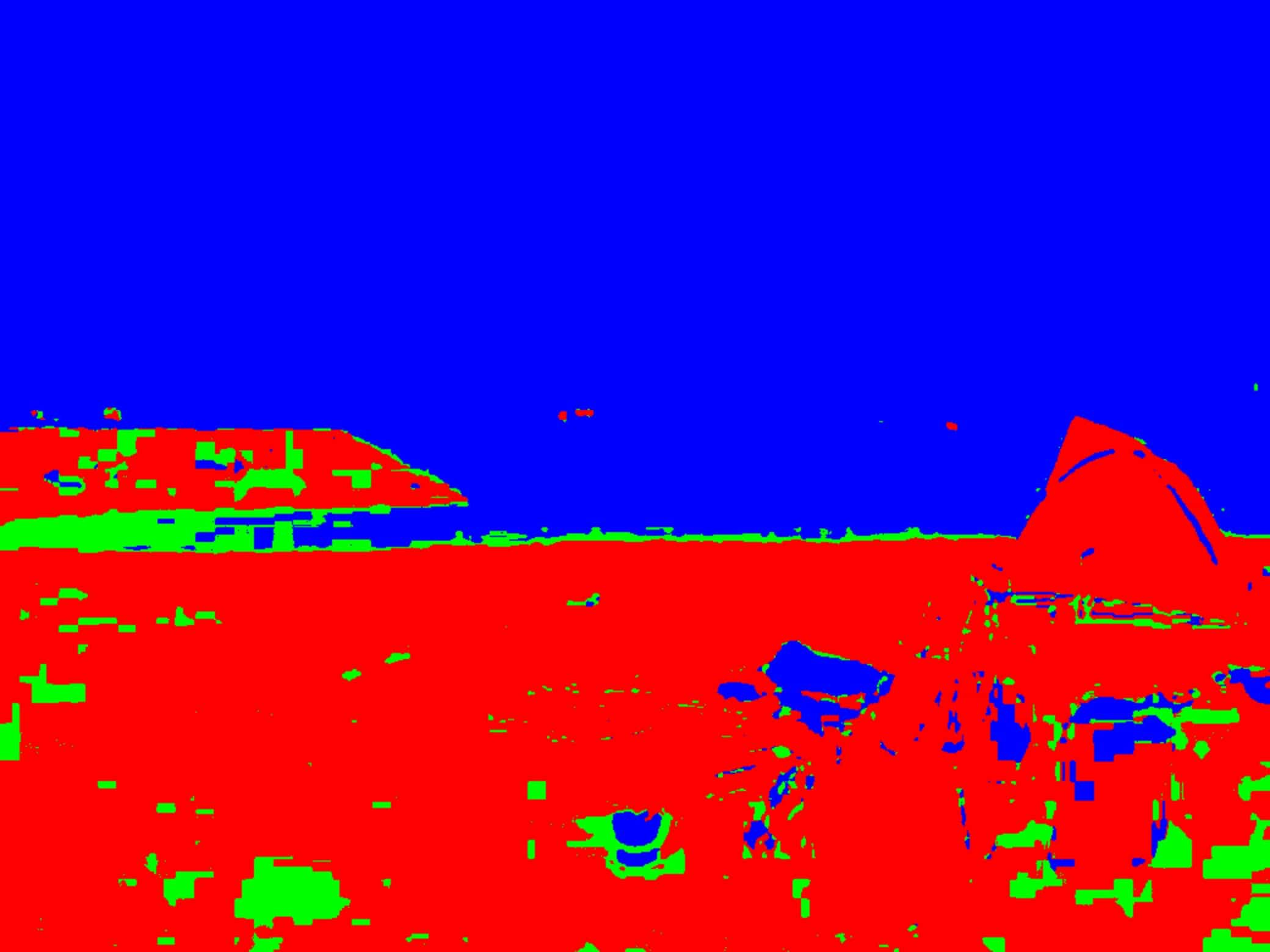

Results from color segmentation

Experiments

Color Segmentation

For my task, I didn’t want to dive deeply into the various types of segmentation used in machine learning, as this was my first time exploring image segmentation. Instead, I used color-based segmentation to simplify each image by grouping similar colors into the reference colors I provided (red, green, and blue):

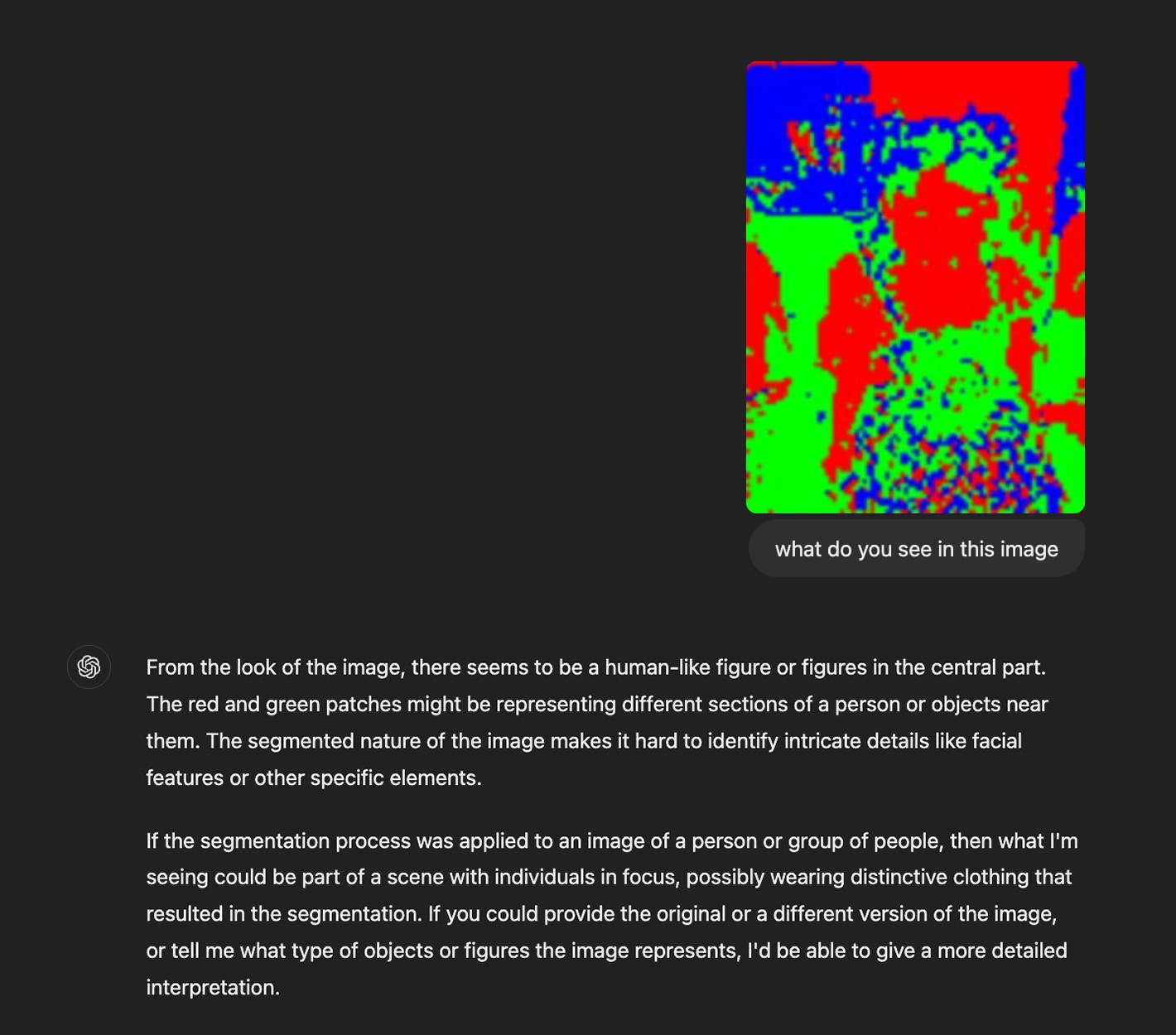

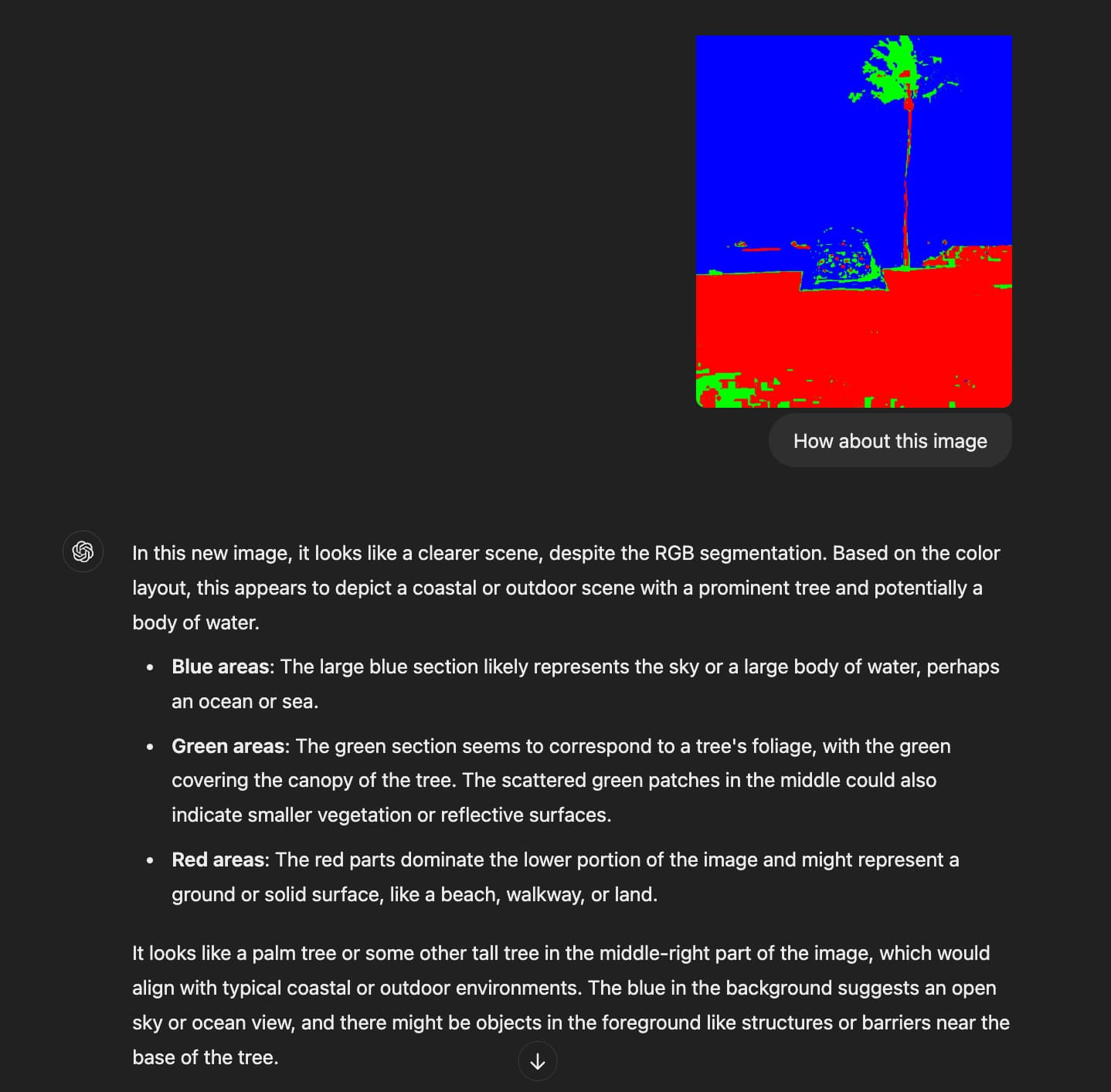

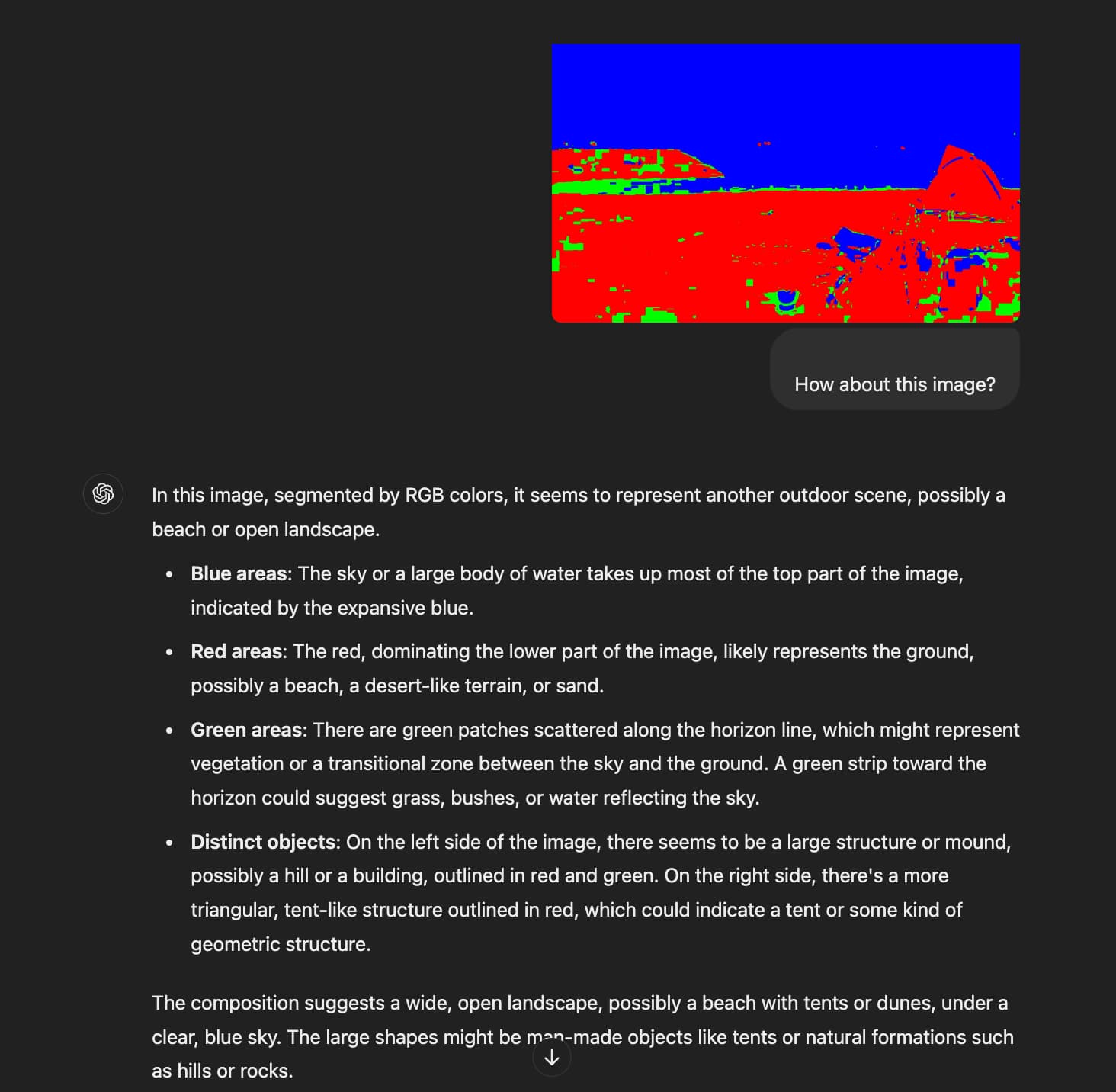

If the image contains various shades of red, green, or blue, the code groups all similar shades into one of these three reference colors, reducing the image to red, green, and blue segments. I tested this approach on three different images, each containing different objects, and then fed the segmented images to ChatGPT to see how it would interpret these color segments.
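The grouping step can be sketched in a few lines of plain JavaScript, the language p5.js is built on. This is a minimal sketch, not the original p5.js code: it assumes "similarity" means Euclidean distance in RGB space, and the names `REFERENCES`, `distanceSq`, and `nearestReference` are hypothetical.

```javascript
// Hypothetical sketch: snap a color to the nearest of three reference colors.
// Assumption: similarity is measured as Euclidean distance in RGB space.
const REFERENCES = [
  { name: "red", rgb: [255, 0, 0] },
  { name: "green", rgb: [0, 255, 0] },
  { name: "blue", rgb: [0, 0, 255] },
];

// Squared Euclidean distance between two [r, g, b] triples.
// The square root is skipped because we only compare distances.
function distanceSq(a, b) {
  return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2 + (a[2] - b[2]) ** 2;
}

// Return the reference closest to the given color.
// On a tie, the earlier reference in the list wins (strict "<").
function nearestReference(rgb, references = REFERENCES) {
  let best = references[0];
  for (const ref of references) {
    if (distanceSq(rgb, ref.rgb) < distanceSq(rgb, best.rgb)) best = ref;
  }
  return best;
}

// Different shades collapse onto the same reference color.
console.log(nearestReference([200, 30, 40]).name); // → red
console.log(nearestReference([120, 10, 10]).name); // → red
console.log(nearestReference([40, 180, 90]).name); // → green
console.log(nearestReference([60, 70, 200]).name); // → blue
```

Every pixel has to land in one of the three groups, even when it is not close to any of them: a neutral gray such as `[40, 40, 40]` is equally far from all three references and, with this tie-break, is labeled red. This is one concrete limitation of segmenting by color alone.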

The goal of this experiment was to see which details survive the segmentation process, as interpreted by ChatGPT. Because I’m using a basic segmentation technique (a foundational approach compared to those used in complex machine learning tasks), the results are quite abstract, which makes them a useful way to test how well a machine learning model can interpret what it "sees" in this reduced pixel data.

How effectively can color segmentation alone distinguish between different objects in an image, and what are its limitations compared to more advanced techniques?

If a machine interprets an image as clusters of color rather than distinct objects, could it actually "see" finer details than humans? While we might not recognize a segmented image without seeing the original, a machine might have a better chance of identifying elements such as objects and scenery from colors alone.

Without scrolling down to see the original images, what do you see?

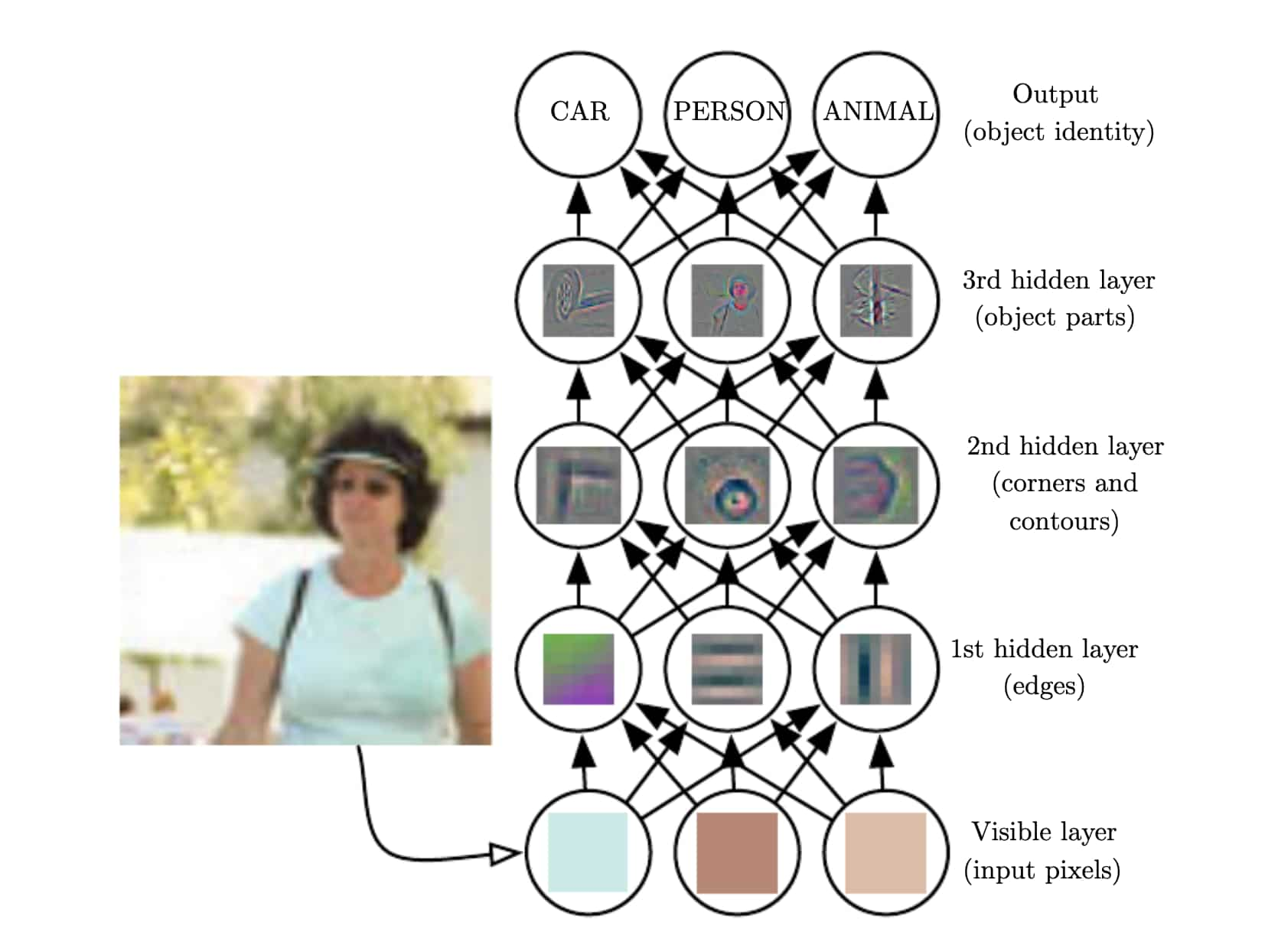

Illustration of a deep learning model, from Deep Learning (Goodfellow et al. 6)

Process

I started by manipulating the reference colors to create iterative variations, which helped me better understand how color segmentation works. In these images, some parts appear more yellow and others more green, depending on the reference colors I used. I found it interesting how this technique could be applied to understand the spatial structure of objects within an image.
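Changing the reference colors changes the segmentation. The sketch below, under the same assumption of Euclidean distance in RGB space, shows a single yellow-green pixel switching groups when a yellow reference is added. The palettes and the `nearestName` helper are hypothetical examples, not the references used in the original experiments.

```javascript
// Hypothetical sketch: the same pixel gets a different label depending on
// which reference colors are in the palette (Euclidean distance in RGB assumed).
function nearestName(rgb, palette) {
  let bestName = null;
  let bestDist = Infinity;
  for (const [name, ref] of Object.entries(palette)) {
    const d = (rgb[0] - ref[0]) ** 2 + (rgb[1] - ref[1]) ** 2 + (rgb[2] - ref[2]) ** 2;
    if (d < bestDist) {
      bestDist = d;
      bestName = name;
    }
  }
  return bestName;
}

const rgbPalette = { red: [255, 0, 0], green: [0, 255, 0], blue: [0, 0, 255] };
// Hypothetical variation: add yellow as a fourth reference color.
const withYellow = { ...rgbPalette, yellow: [255, 255, 0] };

const yellowGreen = [180, 200, 40];
console.log(nearestName(yellowGreen, rgbPalette)); // → green
console.log(nearestName(yellowGreen, withYellow)); // → yellow
```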

Simple Understanding of Deep Learning

Just to clarify, what I'm doing here is very basic; image segmentation is far more complex than measuring raw pixel data. As the deep learning diagram illustrates, interpreting raw input data is challenging for a computer, and mapping a set of pixels to the objects they depict is a complex task.

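This is where deep learning comes in: it breaks the complicated mapping down into a series of nested, simpler mappings, each represented by a different layer of the model. The input is presented at the visible layer, so called because it contains the variables we can observe, while a series of hidden layers extracts increasingly abstract features from it (in the diagram: edges, then corners and contours, then object parts).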
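The idea of a "series of simpler mappings" can be shown with a toy network in which each layer is a small function and the whole model is their composition, f(x) = f3(f2(f1(x))), following the chain structure described in Goodfellow et al. The weights below are arbitrary, hand-picked numbers and `denseRelu` is a hypothetical helper; nothing is trained, so this network recognizes nothing. It only shows how each layer feeds the next.

```javascript
// Toy illustration (not the model in the diagram): one layer = one simple mapping.
// Each output unit computes ReLU(sum_j weights[i][j] * input[j] + biases[i]).
function denseRelu(weights, biases) {
  return (input) =>
    weights.map((row, i) =>
      Math.max(0, row.reduce((sum, w, j) => sum + w * input[j], biases[i])));
}

// Arbitrary, hand-picked weights for illustration only.
const layer1 = denseRelu([[1, -1], [0.5, 0.5]], [0, 0]); // visible input -> hidden 1
const layer2 = denseRelu([[2, 0], [-1, 1]], [0, 1]);     // hidden 1 -> hidden 2
const layer3 = denseRelu([[1, 1]], [0]);                 // hidden 2 -> output

// The "deep" model is just the composition f(x) = f3(f2(f1(x))).
const network = (x) => layer3(layer2(layer1(x)));

console.log(network([3, 1])); // returns [5]: layer1 gives [2, 2], layer2 gives [4, 1]
```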

Original images before segmentation by RGB pixel color

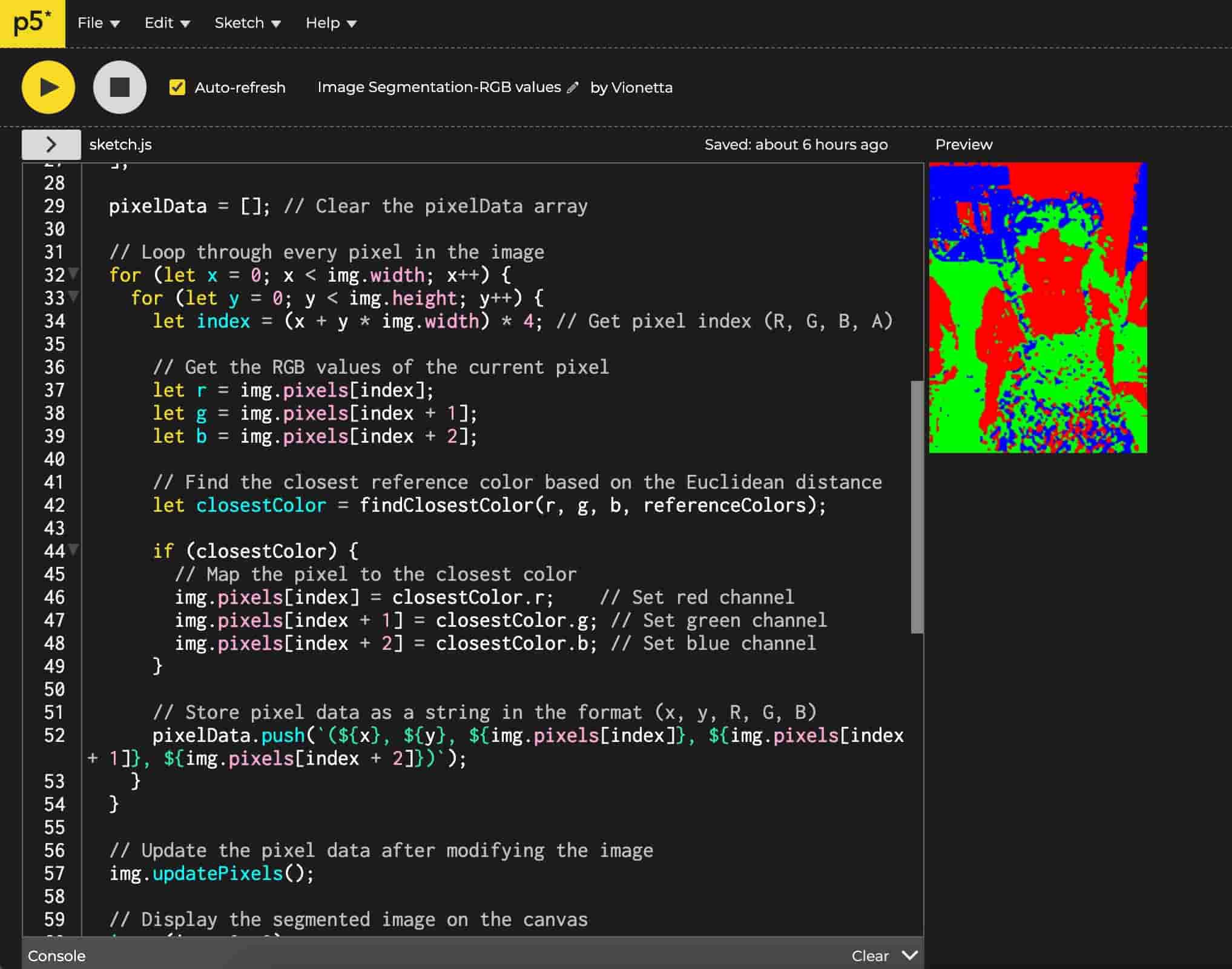

Sketch Code

To explain the technical side, I fed three different images into a sketch I created in p5.js. The sketch groups the RGB values of each image by color similarity: using reference colors defined in the sketch, it segments the image into groups aligned with red, green, and blue. Focusing on just these three distinct colors makes it a simple way to understand color segmentation.
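The whole-image loop can be sketched as a function over a flat RGBA pixel array, the layout p5.js uses for `img.pixels`. It is written as plain JavaScript so it runs outside the browser, and it assumes Euclidean distance in RGB space. `segmentPixels` and `REFERENCE_COLORS` are hypothetical names, and the original sketch may measure similarity differently.

```javascript
// Hypothetical sketch of the segmentation loop over a flat RGBA buffer
// ([r, g, b, a, r, g, b, a, ...]). Assumes Euclidean distance in RGB space.
const REFERENCE_COLORS = [
  [255, 0, 0], // red
  [0, 255, 0], // green
  [0, 0, 255], // blue
];

// Recolor every pixel with its nearest reference color (in place) and return
// one label per pixel: 0 = red, 1 = green, 2 = blue. Alpha is left untouched.
function segmentPixels(pixels, references = REFERENCE_COLORS) {
  const labels = new Array(pixels.length / 4);
  for (let p = 0; p < pixels.length; p += 4) {
    let best = 0;
    let bestDist = Infinity;
    references.forEach((ref, k) => {
      const d = (pixels[p] - ref[0]) ** 2 +
                (pixels[p + 1] - ref[1]) ** 2 +
                (pixels[p + 2] - ref[2]) ** 2;
      if (d < bestDist) {
        bestDist = d;
        best = k;
      }
    });
    pixels[p] = references[best][0];
    pixels[p + 1] = references[best][1];
    pixels[p + 2] = references[best][2];
    labels[p / 4] = best;
  }
  return labels;
}

// Two pixels: a dark red and a muted blue.
const buffer = new Uint8ClampedArray([150, 20, 30, 255, 20, 90, 160, 255]);
const labels = segmentPixels(buffer);
// labels are 0 (red) and 2 (blue); buffer RGB is now (255, 0, 0) and (0, 0, 255)
console.log(labels, Array.from(buffer));
```

In a p5.js sketch, the same function could be applied to an image loaded with `loadImage()` by calling `img.loadPixels()`, passing `img.pixels` to `segmentPixels`, and calling `img.updatePixels()` before drawing it with `image()`. Returning a label per pixel, rather than only recolored pixels, makes it easy to count how much of the image falls into each color group.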

ChatGPT's responses after I input the segmented images for analysis

Reflections

Although the process is straightforward, I really like the abstract look of the segmented results. I'm curious to see whether other people can identify what’s happening in a segmented image without seeing the original, which could help answer my question of whether a machine might actually “see” better than we do through these segmented images.

Moving Forward...

To be honest, the main purpose of these experiments was just to understand image segmentation as a technique. This was basically a technical exercise; I didn’t have time to expand it further, as I had to prepare my table for formative feedback sessions by the end of the week.

I believe that I learn best through making: creating these small tasks for myself and continuously questioning them as I develop them. While this approach is somewhat risky, since I don’t always know what I’ll end up making, I enjoy the curiosity it gives me. However, I realize I still need to improve how I reflect on these activities, as it can be hard to connect them back to my research without a structured reflection process. In the coming weeks, I plan to find a methodology that helps me reflect effectively on my experiments as I continue making.

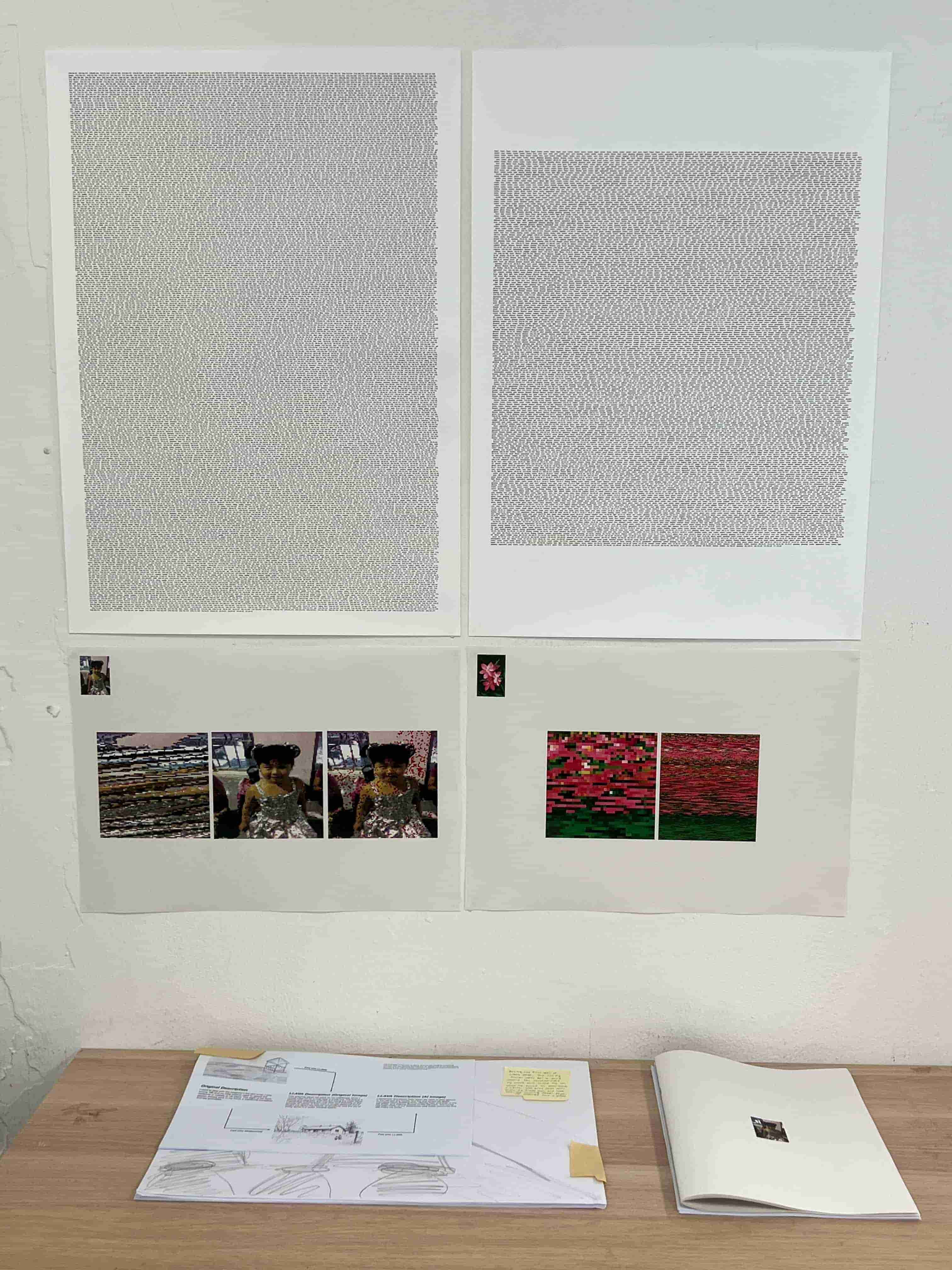

Table Setup

I started compiling my experiments into slides

I expected this session to be quite intense, but I found it enjoyable, as we got to listen to each other's projects, and some even had interactive setups. It felt like a mini open studio where we could look around and see each other's progress.

One takeaway, not only from Andreas’ feedback to me but also relevant to others, was the importance of focusing on doing rather than continuously imagining picture-perfect ideas. It’s easy to imagine the perfect plan, but executing it can be frustrating when it goes beyond our limitations. Although I still have a lot to work on, I feel satisfied with what I’ve accomplished so far and look forward to refining my approach and methodology for my research proposal outline.

References

Goodfellow, Ian, et al. Deep Learning. Cambridge, Massachusetts, The MIT Press, 2016, www.deeplearningbook.org/.