WEEK 6

FINALIZING DIRECTION

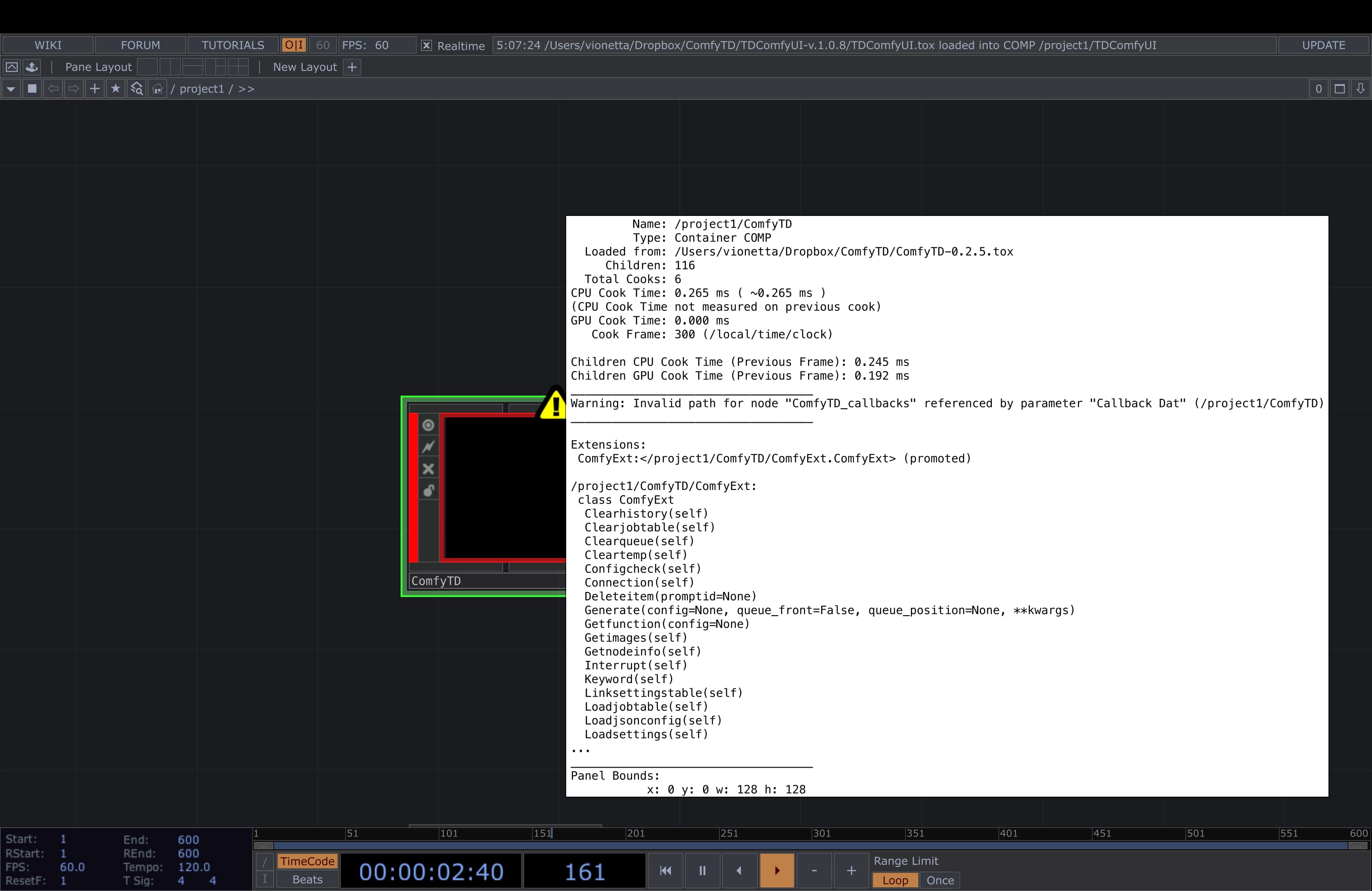

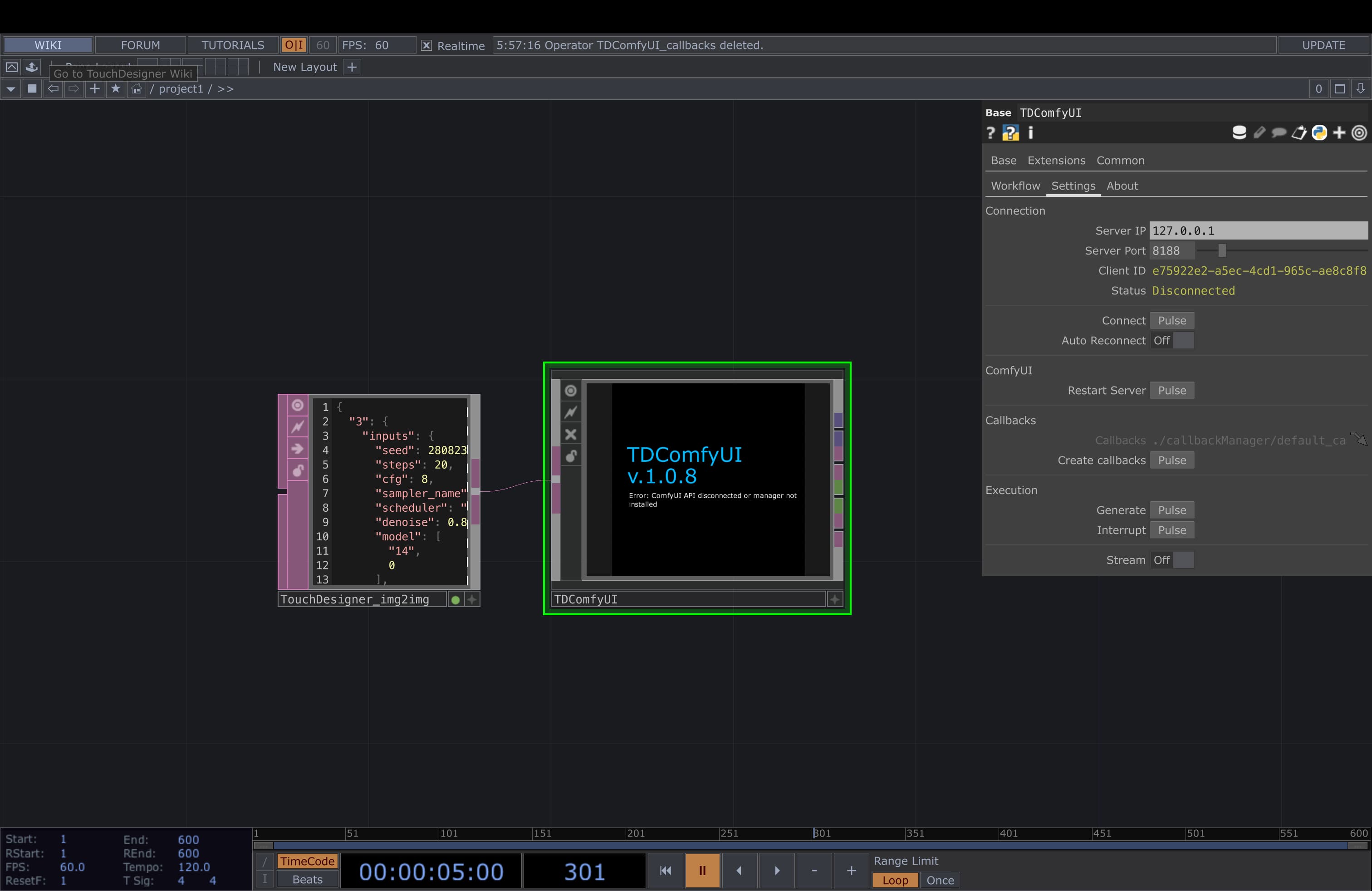

ComfyTD Operator by DotSimulate

ComfyUI + TouchDesigner

Unsupported Version

I tried using this extension, which I downloaded from the artist's Patreon. It lets you send images to your ComfyUI workflows and generate images from them inside the TD interface through an API. It also includes parameters for controlling queue timing and for keeping the generation process stable on lighter hardware.

I wanted to bring my workflows into the TD interface for two reasons. First, I wanted an interface where my visual outputs are displayed in clean windows without having to open the output directory. Second, I wanted to explore those outputs using TD's visual effects. Because TouchDesigner can feed external inputs, such as motion tracking and audio, into its operators, I wanted to see what I could do to create my own generative experience. Designing the interface in TouchDesigner would also be easier, since I already have basic knowledge of the program.

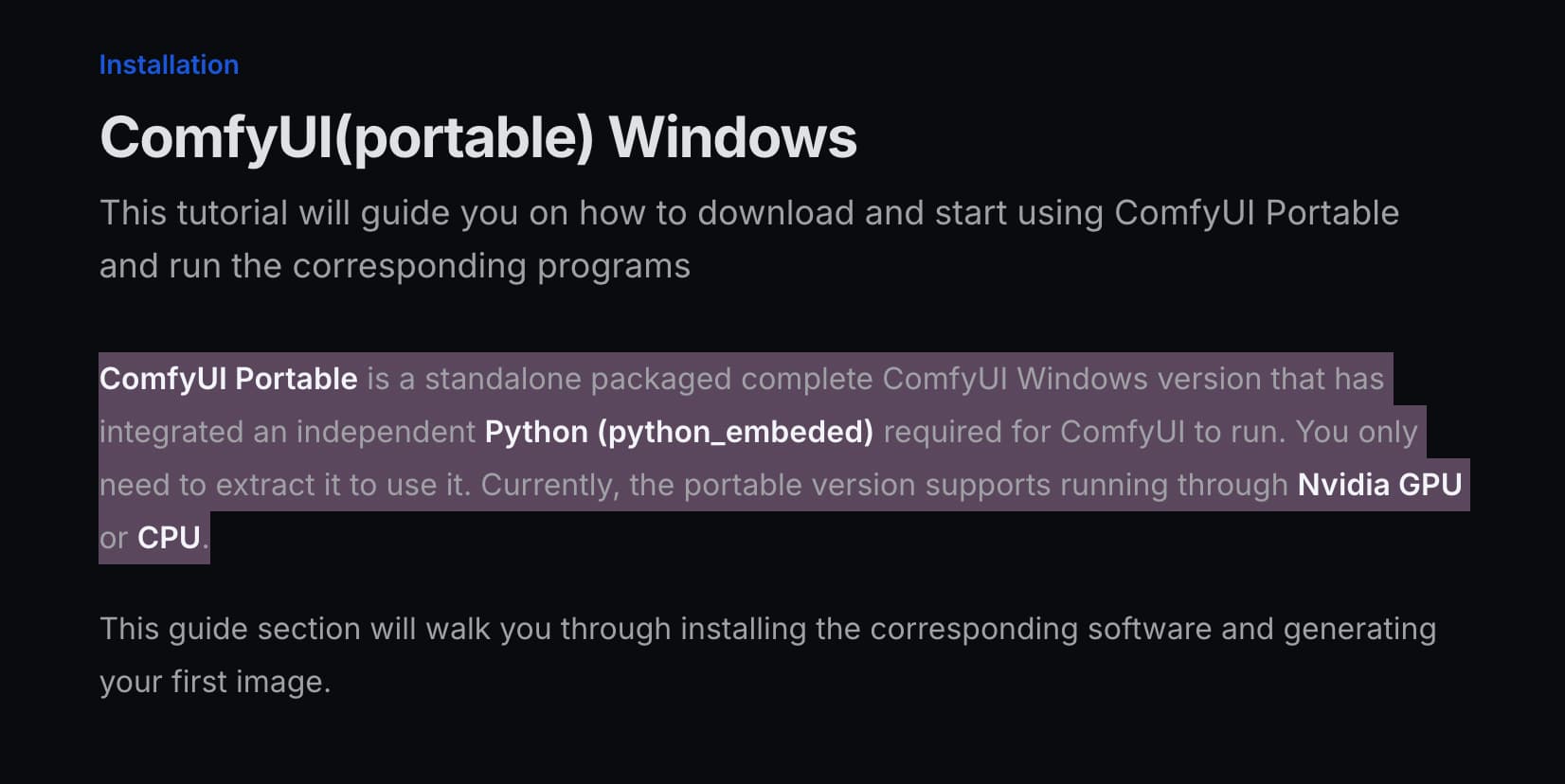

However, it turned out that the operator only works with the ComfyUI Windows portable version. This was a real issue, because the incompatibility meant I couldn't do anything with it. I also tried another base operator available on GitHub, but it also required a manager installed in the Windows portable version. So I decided to set my priorities.

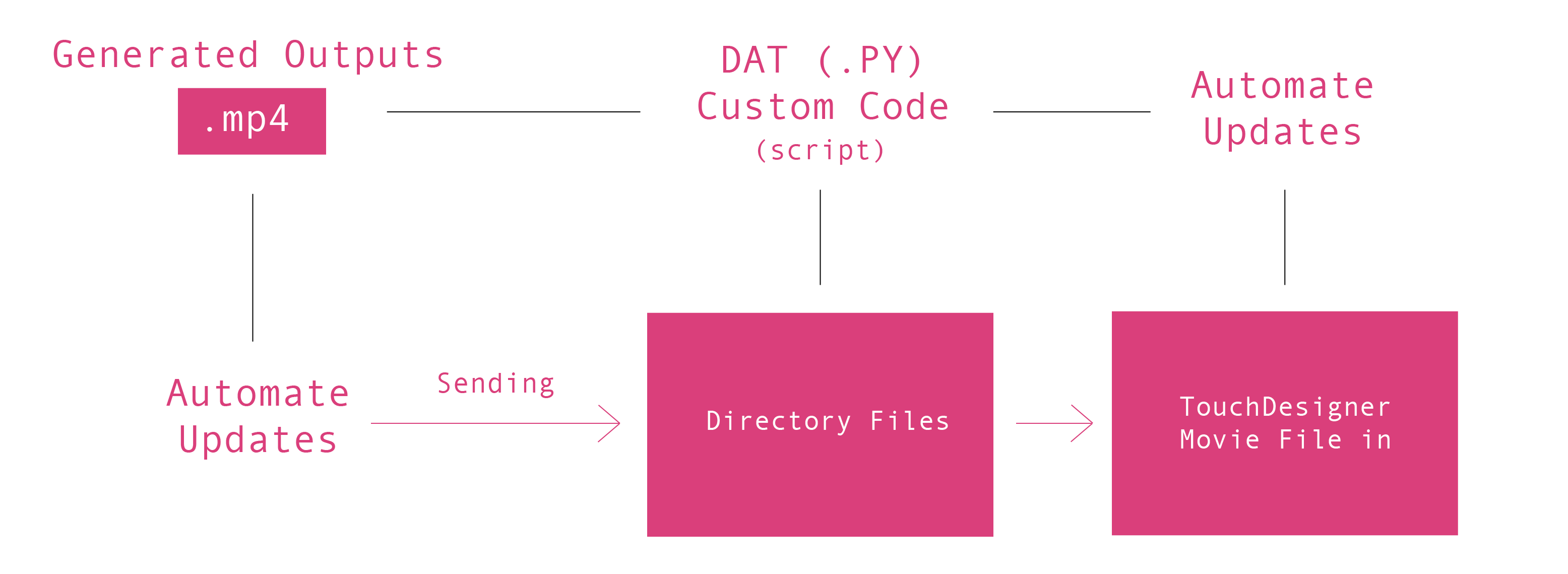

Solution

If I only wanted to use TD as an efficient interface for displaying the outputs without the workflows being visible, I thought I could connect the visual outputs through a DAT (a type of operator in TD) that reads from the output directory. The plan was to write custom code in a DAT so that the display automatically updates as new visuals are generated and saved to that directory. This would be a much simpler process than meddling with things I have no clue about and ending up breaking my laptop because of it.
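A minimal sketch of the directory-watching idea in plain Python, the language TouchDesigner uses for scripting. It assumes ComfyUI saves PNG, JPG or WebP files into a single output folder; `newest_image`, `OutputWatcher` and `IMAGE_EXTENSIONS` are hypothetical names, not part of TouchDesigner or ComfyUI. It finds the most recently modified image and reports it only when it changes, so the display is updated once per new output.

```python
from pathlib import Path
from typing import Optional

# Assumed output file types; adjust to whatever the ComfyUI workflow actually saves.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def newest_image(folder: Path) -> Optional[Path]:
    """Return the most recently modified image file in `folder`, or None if there is none."""
    images = [
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    ]
    if not images:
        return None
    # Pick the file with the latest modification time.
    return max(images, key=lambda p: p.stat().st_mtime)


class OutputWatcher:
    """Hypothetical helper: remembers the last image seen and reports only new ones."""

    def __init__(self, folder: Path):
        self.folder = Path(folder)
        self.last_seen: Optional[Path] = None

    def poll(self) -> Optional[Path]:
        """Return the newest image if it differs from the last one reported, else None."""
        latest = newest_image(self.folder)
        if latest is not None and latest != self.last_seen:
            self.last_seen = latest
            return latest
        return None
```

Inside TouchDesigner, one option (an assumption, not tested here) would be to call `poll()` from an Execute DAT's `onFrameStart` callback and, when it returns a path, assign `str(path)` to a Movie File In TOP's `file` parameter, e.g. `op('moviefilein1').par.file`, where the operator name is hypothetical. Comparing modification times keeps the logic simple; one caveat is that an image still being written could be picked up early, so a short delay before loading it may be needed.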

SLOW AI

Slow AI challenges the fast-paced, data-driven nature of modern AI by encouraging a more reflective approach. It views AI not as something entirely new, but as a continuum that evolves gradually over time. Supported by critical AI researchers, designers, and artists, this non-profit community encourages meaningful discussions, writings, and creative projects that subvert corporate-first AI narratives.

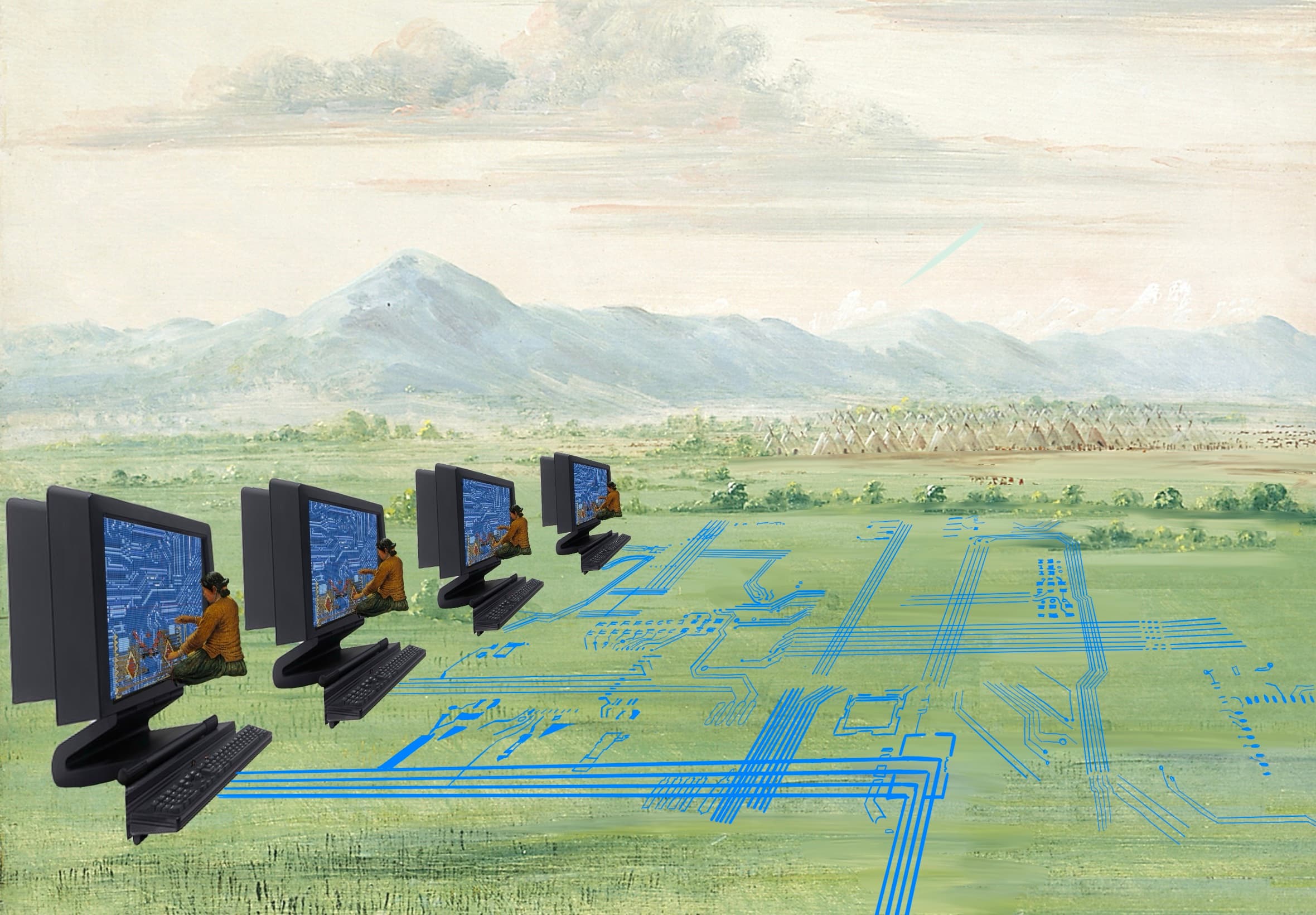

Looking through their archive page, what I found interesting about each project was that it combines different tools to create narratives that are not just about AI itself but about the process behind building it. Existing images are reused to create new ones, representing AI in a more compelling way.

"Common visual tropes of glowing brains, humanoid robots and walls of code create a distorted view of AI, giving it a mystical, almost god-like quality. So, we’ve been wondering – how can we make it easier to create better images of AI?"

AI as a Snapshot of Time

It's not about making these processes easier or more accessible; it's about putting our "time" into them. For example, if we picture these machines as a snapshot of time, we should reflect on the historical context in which they were developed. These systems are built by humans, and they are shaped by the society they were developed in, including its biases. Recognizing AI as a snapshot of a time involves critically examining these biases and the contexts they arise from, so that AI development moves towards more objective, fair, and equitable outcomes.

Reflections

As I progressed with my final dissertation draft, I had to finalize the direction of this project. What am I trying to articulate? What value does it bring to the community?

Conceptual Approach

As generative AI becomes more accessible and easier to use, its rapid development has made it harder to tell what is real and what is fake in the digital space. People can now create images, sounds, and writing easily with AI. This accessibility has led to widespread commercial use, driving fast production and consumption.

As these tools become simpler to use, their users are rarely concerned with the processes behind the machines: what data they are trained on and how they were developed. When generating a desired output, most people aim to make it look or sound as if it was not made by AI. But why are we trying to create a reality that is not true?

Between the Artificial and Reality

Why can't we just take a moment and cherish the little mistakes and flaws generated by these machines? I feel like people are getting impatient and are trying to create machines that are more efficient than humans, or even better than them. But what if we take a step back and embrace the imperfections of these machines?

This approach allows us to create an in-between reality, one that blurs the artificial and the real. What matters is involving our own interpretations in the process, something often overlooked when AI is treated as an easy shortcut. With this project, I aim to highlight the value behind the processes of these generative machines. Crafting prompts is one way of communicating with them, but understanding their generative structures is equally important. These structures shape how the machines interpret inputs, reflecting the way we write, think, and imagine.