WEEK 9

MERGING MEMORY

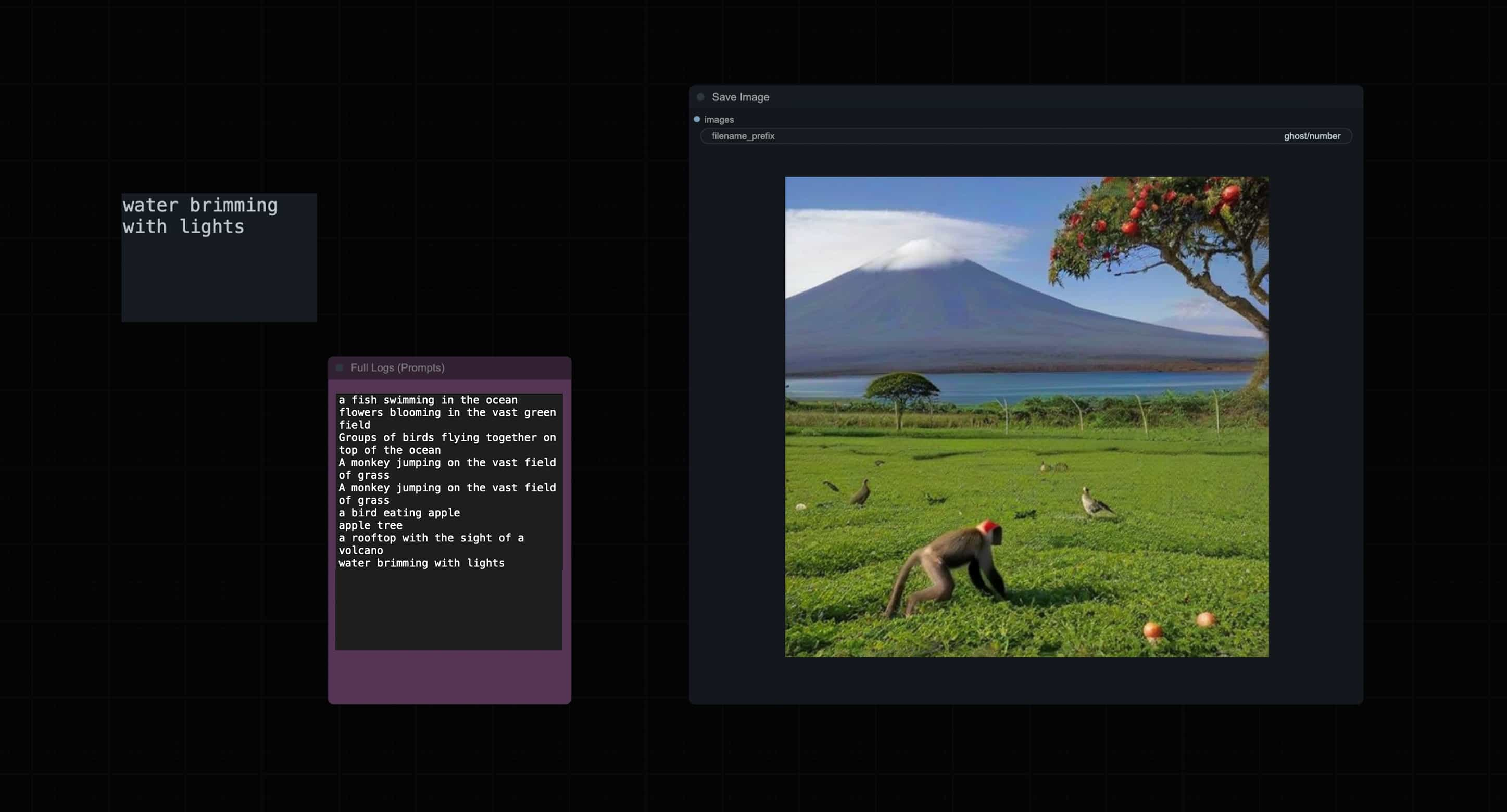

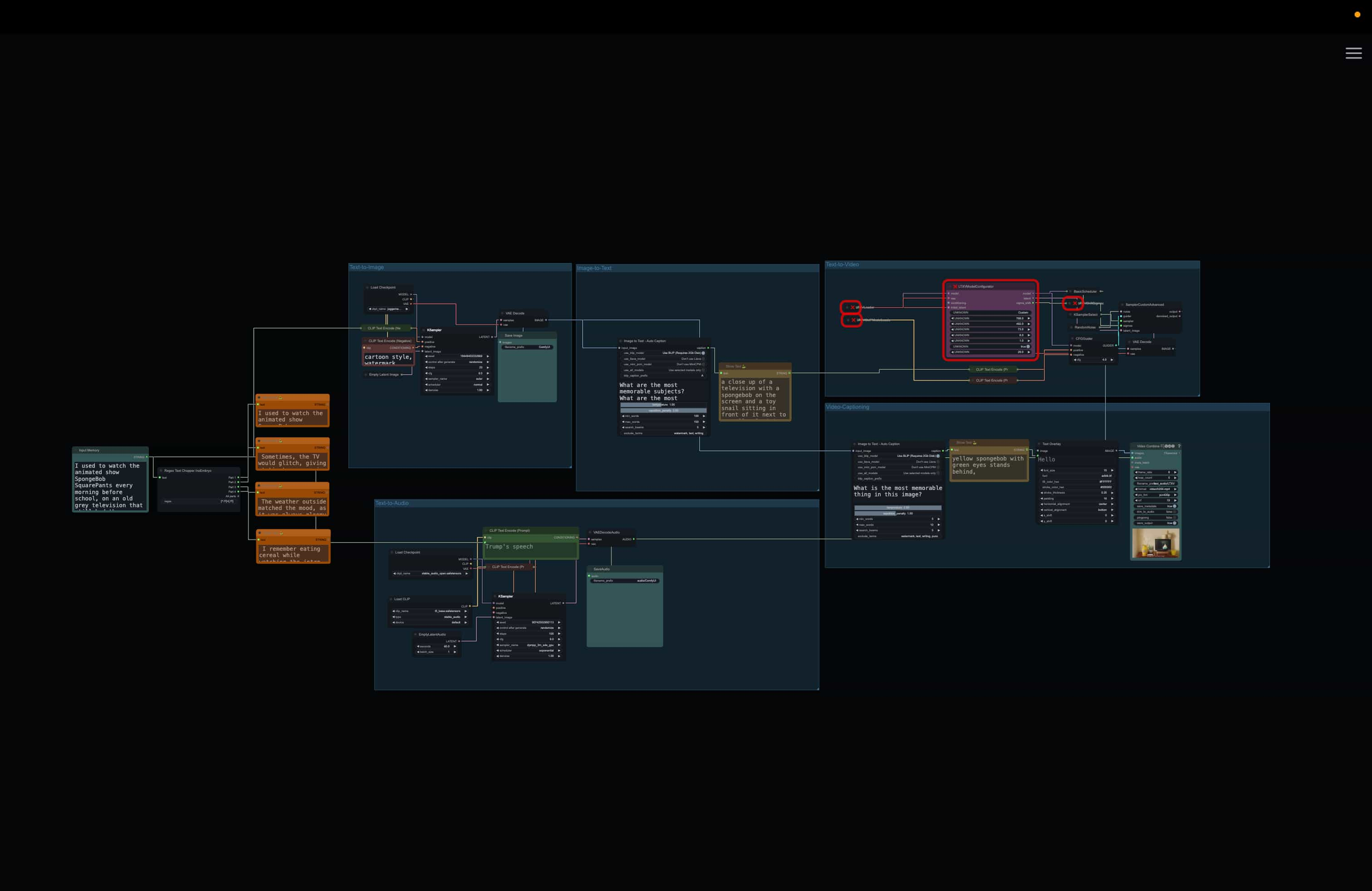

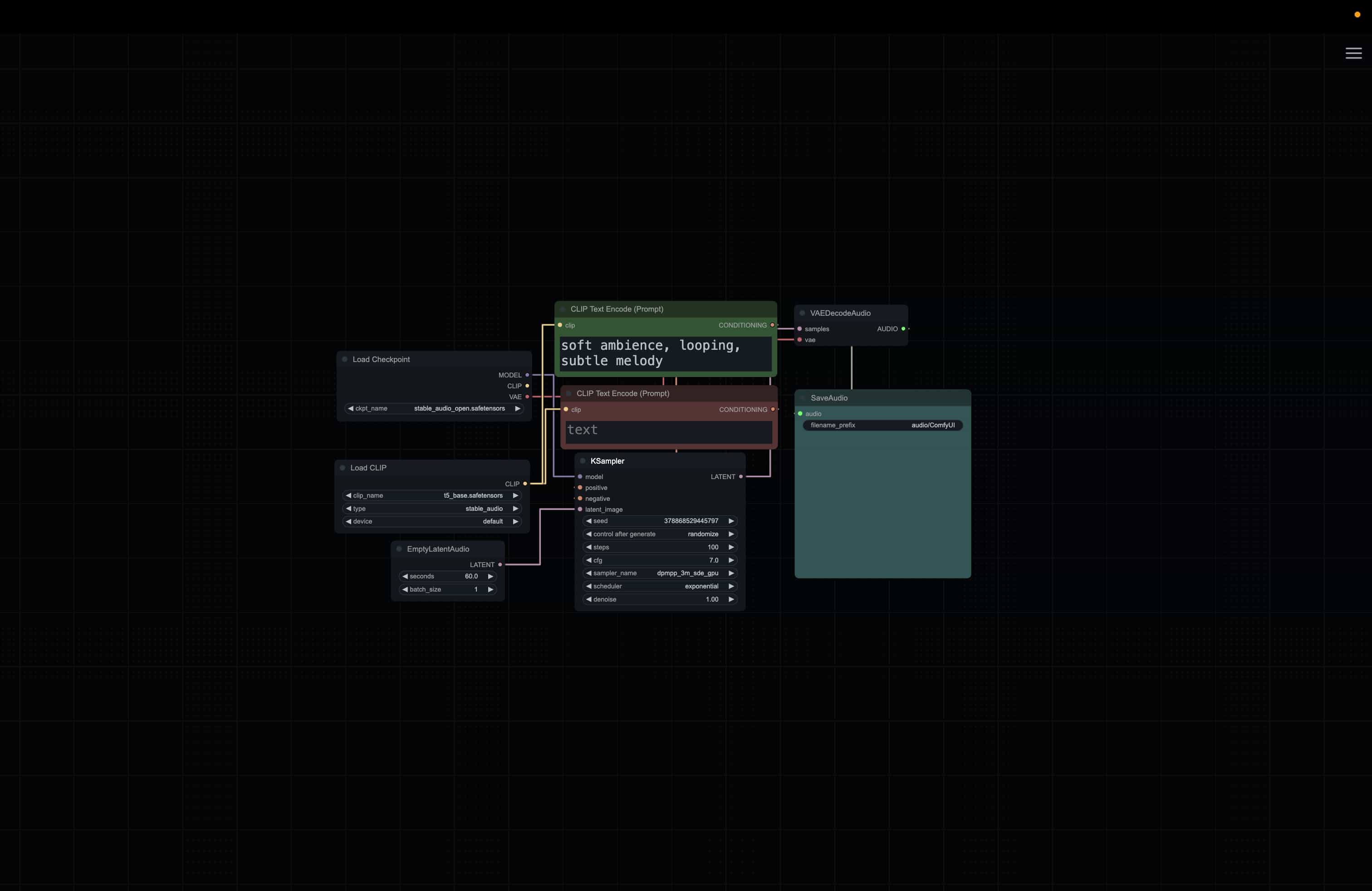

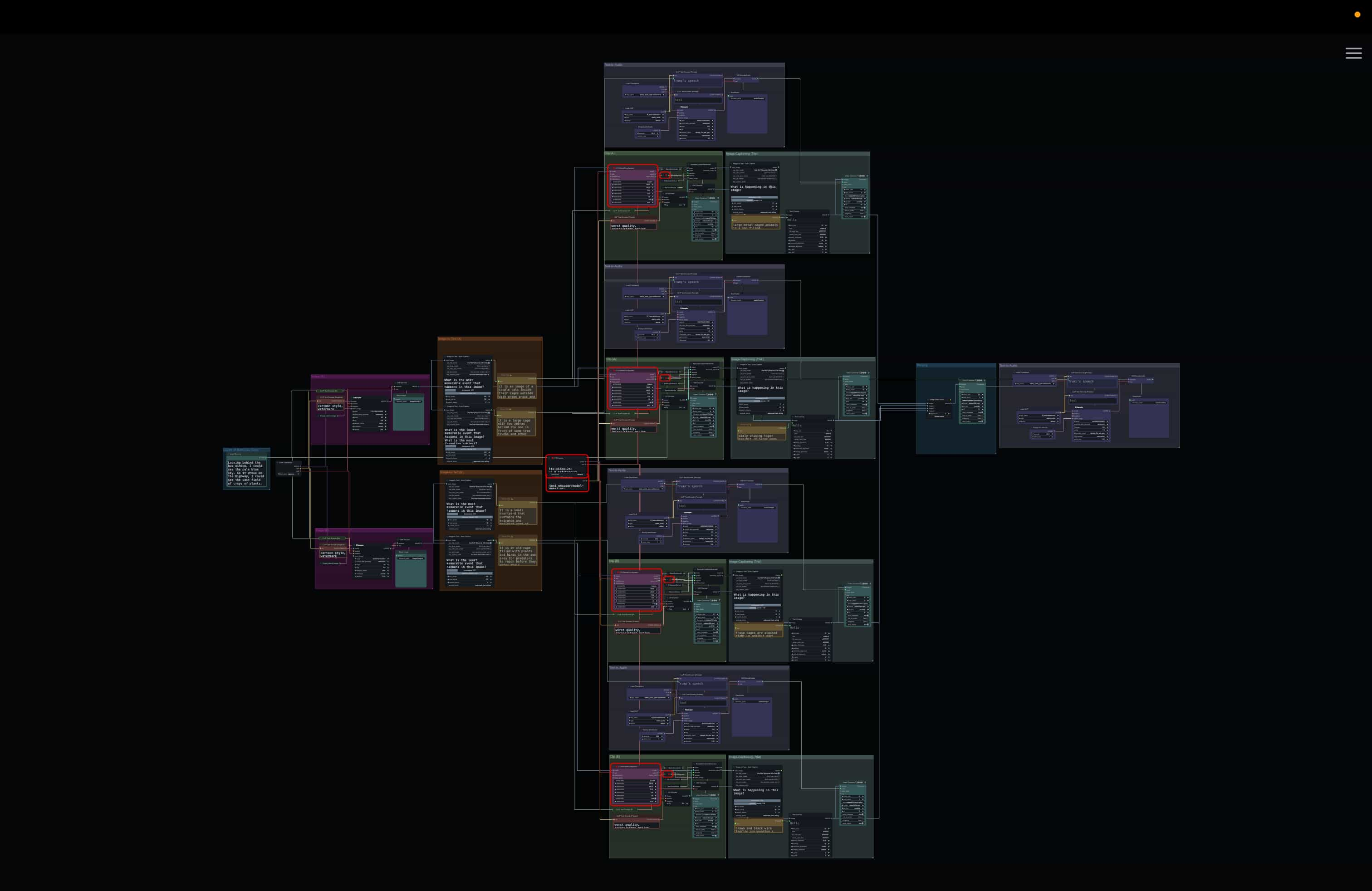

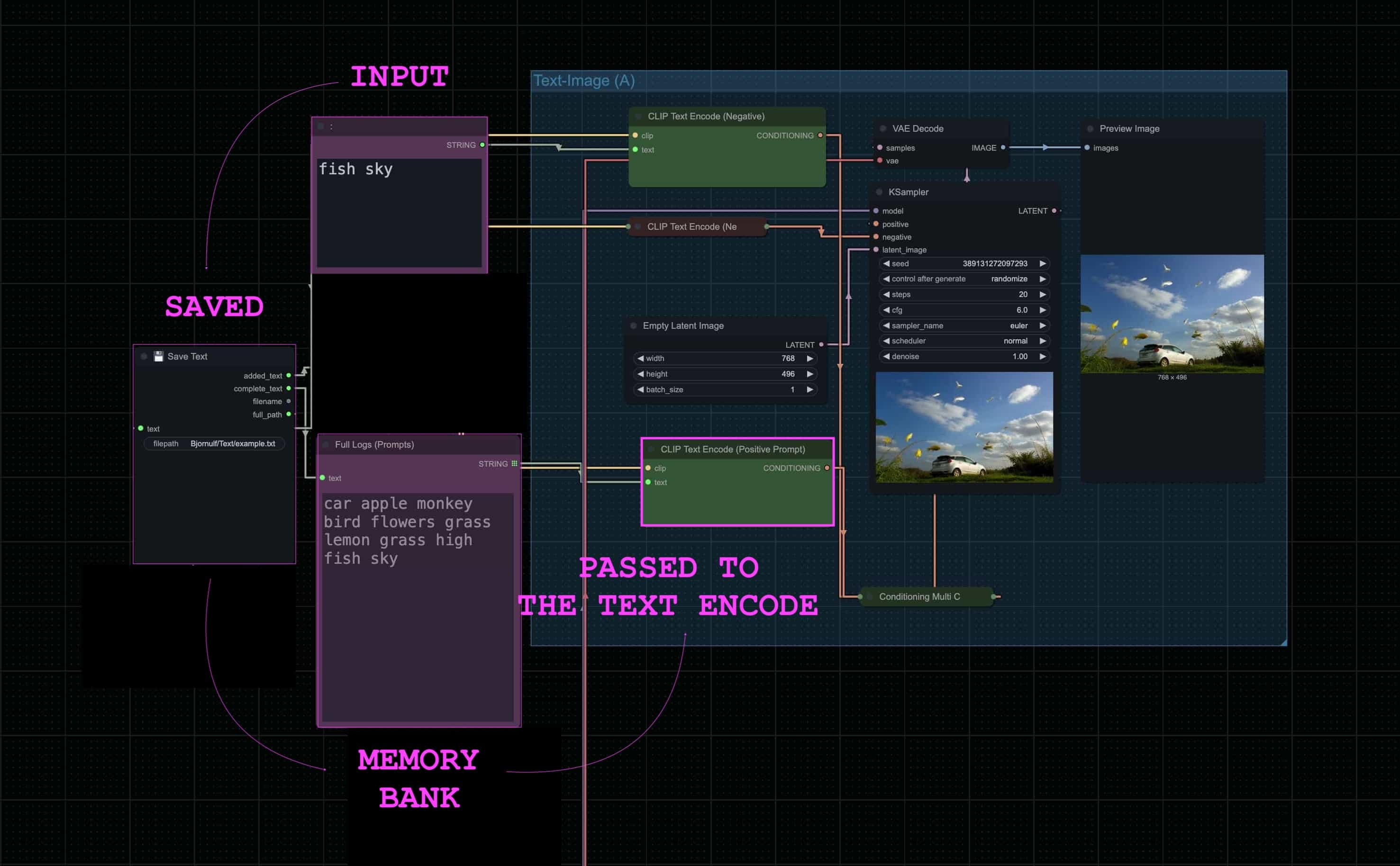

Generative Workflows I have built in ComfyUI

Generative Workflows

Building numerous workflows has taught me a lot about using ComfyUI to generate AI content. From text-to-image to image-to-video generation, exploring the tool has given me the basic knowledge to create my own generative workflows. Along the way, I learned to control the prompts and produce unexpected results.

Returning to my research question: what makes an experience truly human?

I aim to explore that question by focusing on human memory, which is something that can’t be fully captured through datasets or accurate measurements. Our memories shift over time; they blur, fade, and blend with other moments.

Interestingly, machine memory is also fluid in its own way, as the outcomes I have generated so far show. While machines are often seen as rigid or repetitive, they don’t always behave the same way either. The way objects are shaped, placed, and seen in different scenarios comes from the range of possibilities that the machine samples from the data it was trained on.

So how can I use AI to highlight the differences between how we remember and how machines do?

AI Images generated by ChatGPT

Merging Memory

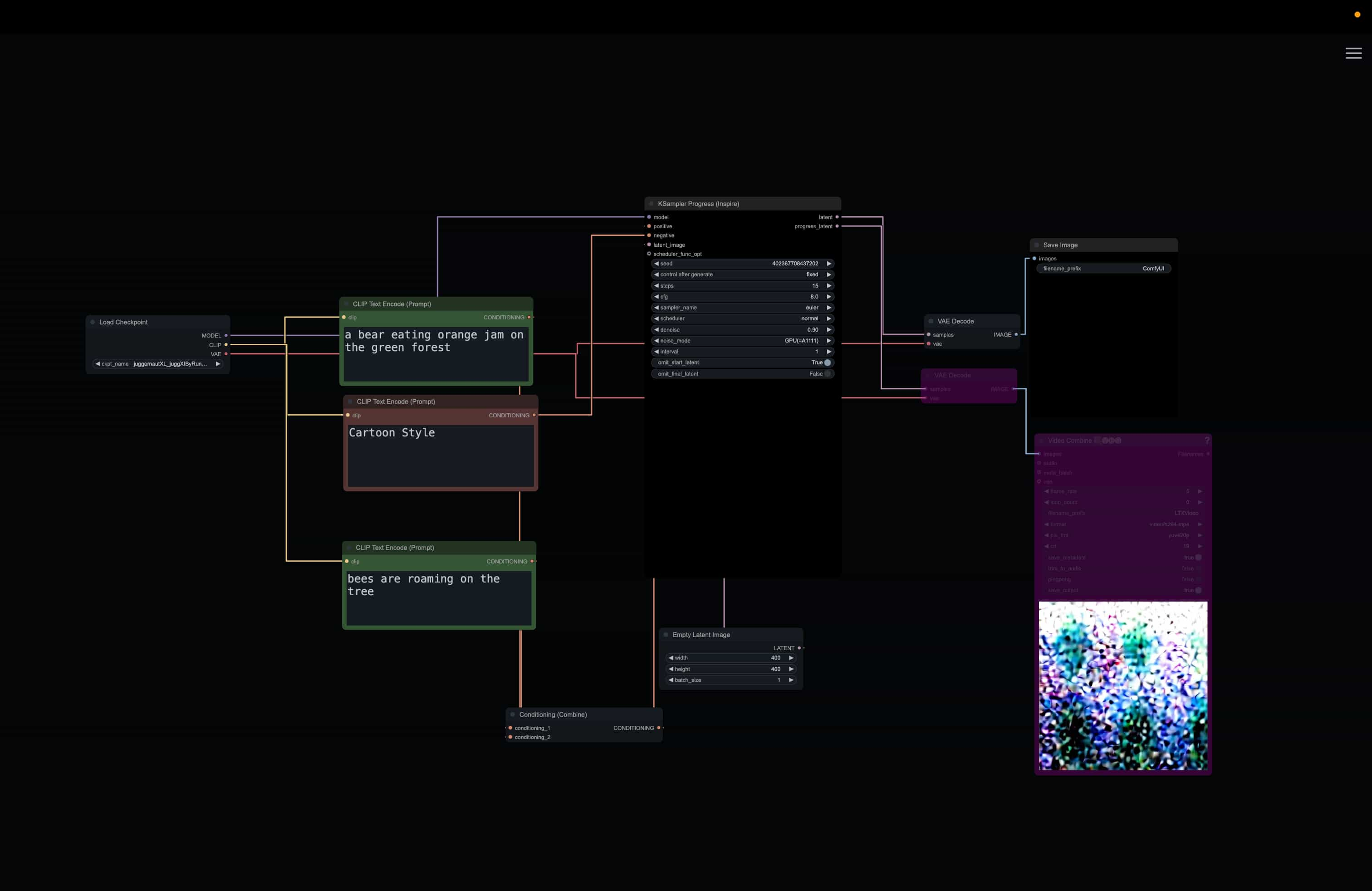

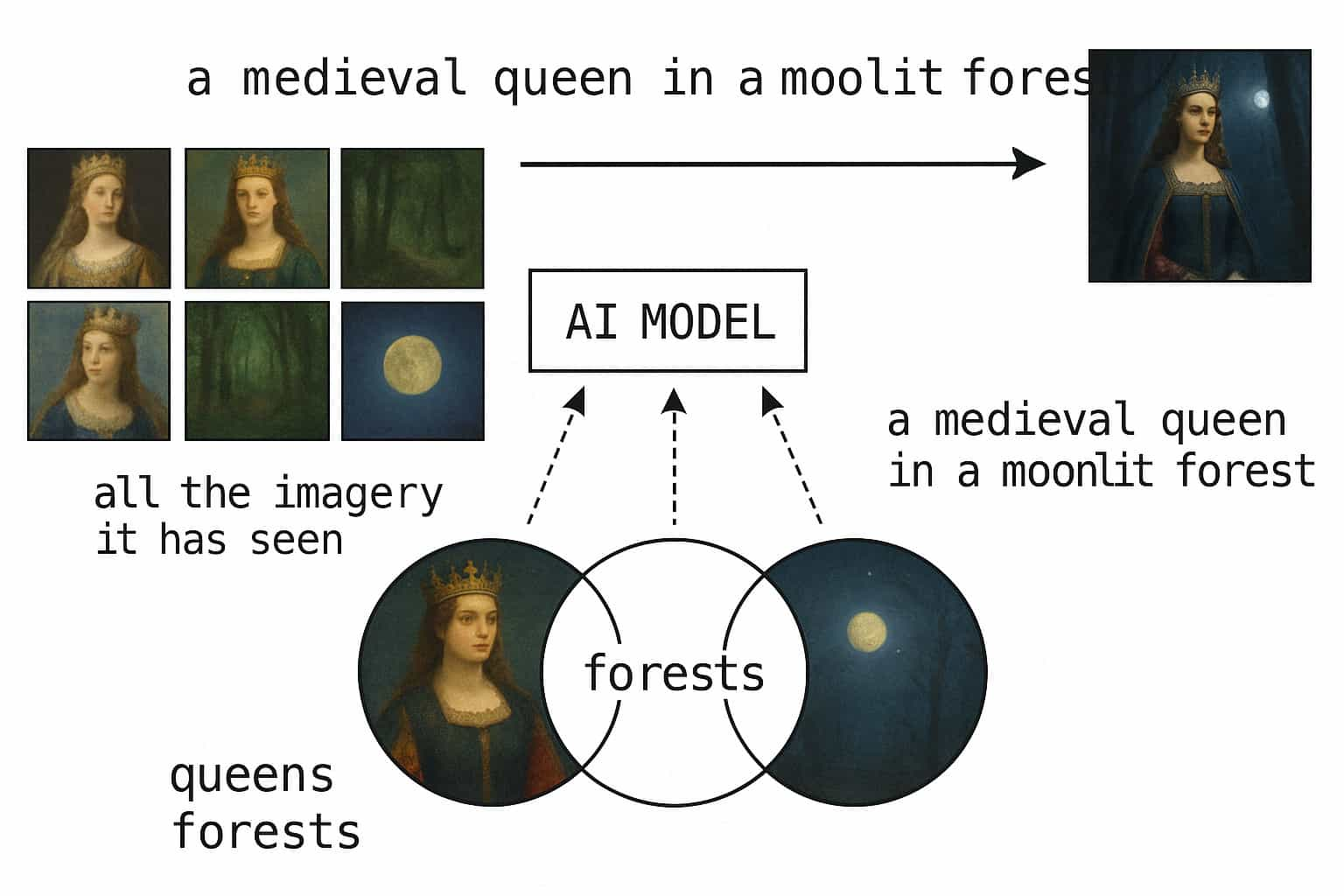

When an AI creates an image, it doesn't just pull from a single image it was trained on. Instead, it has learned patterns, styles, and concepts from many images during training, sometimes millions of them. So when you give it a prompt like

“a medieval queen in a moonlit forest,”

the model draws on all the imagery it has seen related to queens, forests, moonlight, etc., and then generates something that captures the essence or average vibe of those elements.

It’s creating something that reflects, statistically, what it has learned about those ideas.

I believe that this process is what makes AI images unbearably repetitive, yet fluid in their own way. I want to show this process to understand how memory, both human and machine, can be constructed, distorted, and reinterpreted.

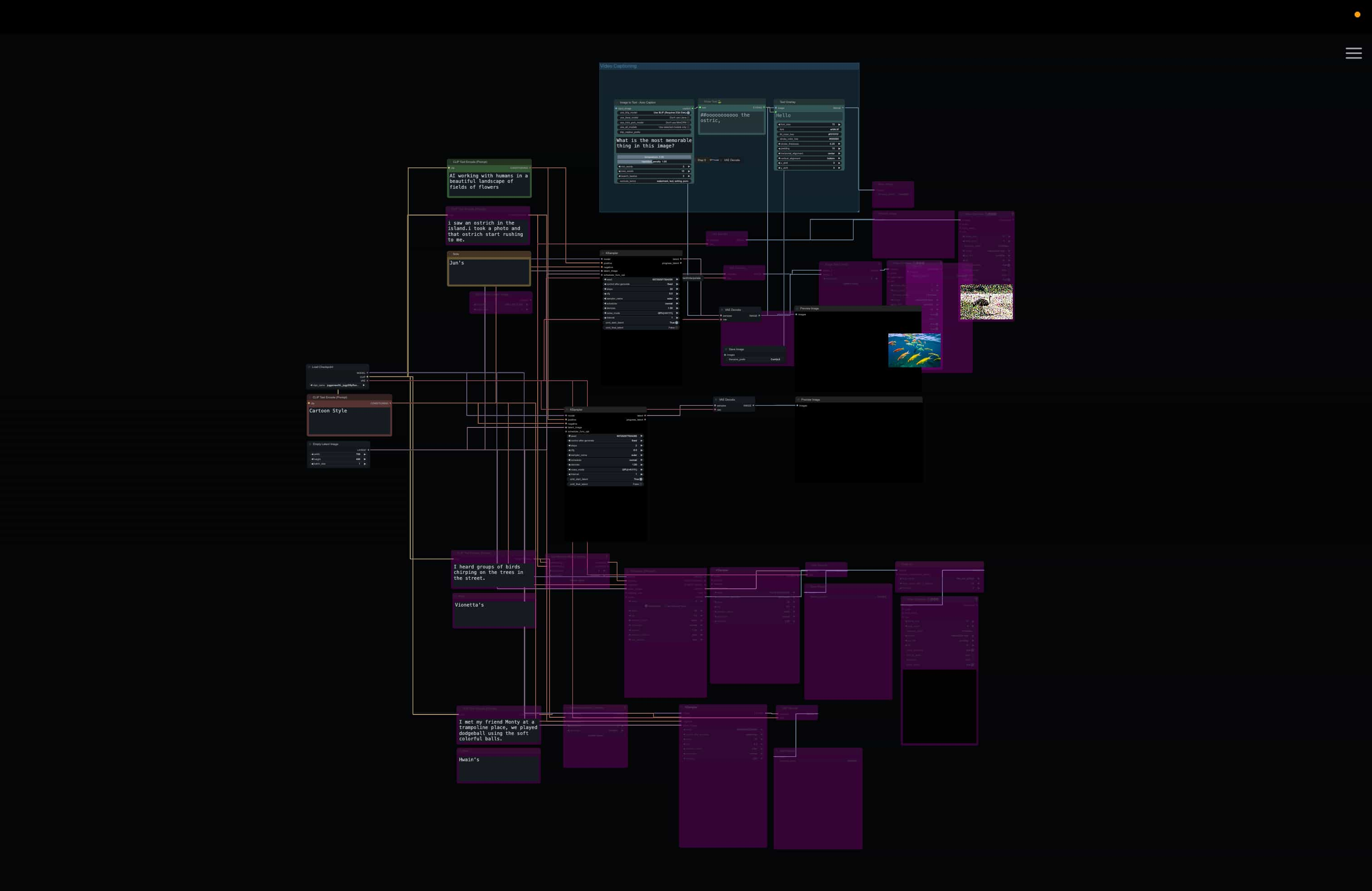

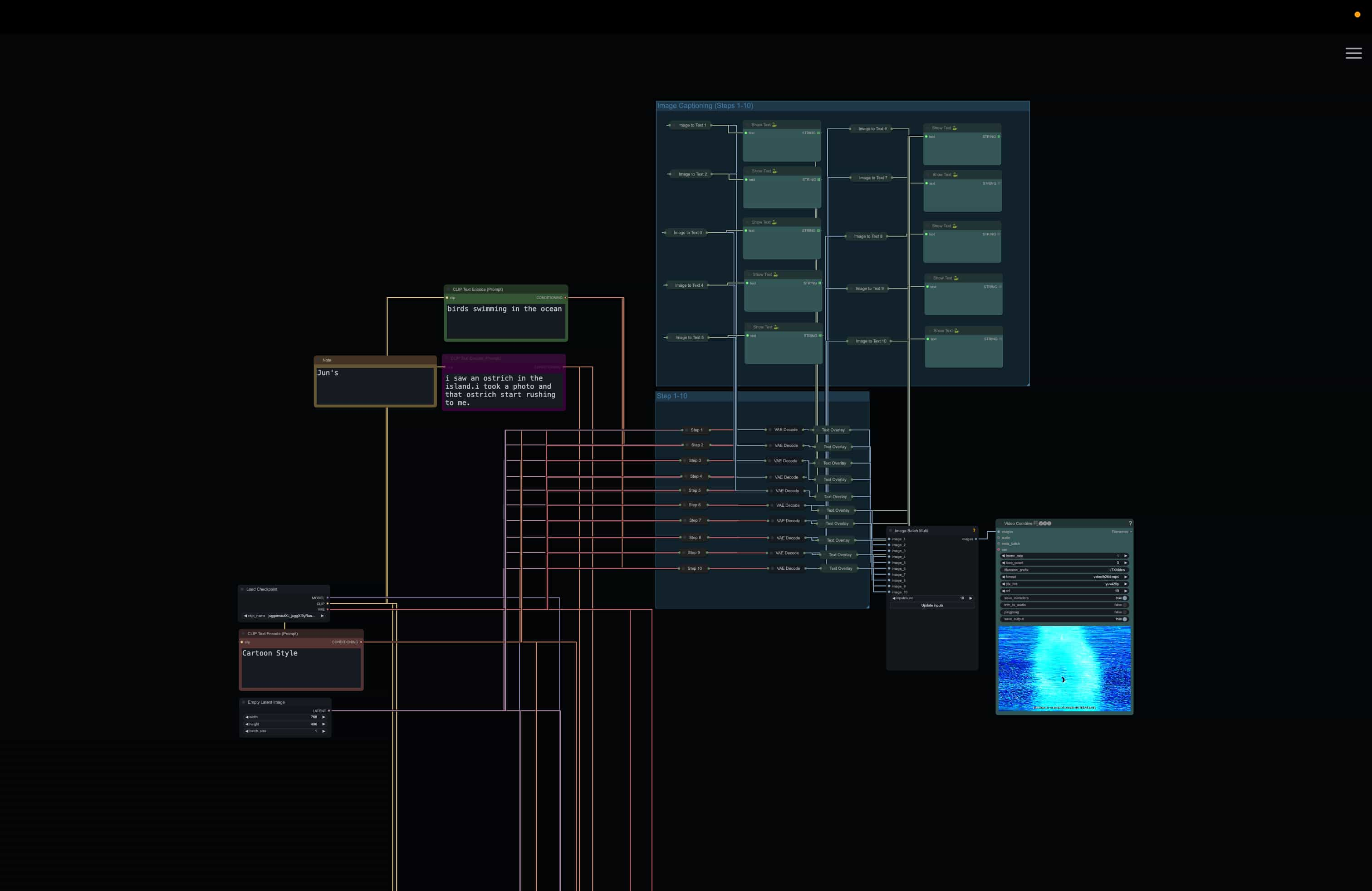

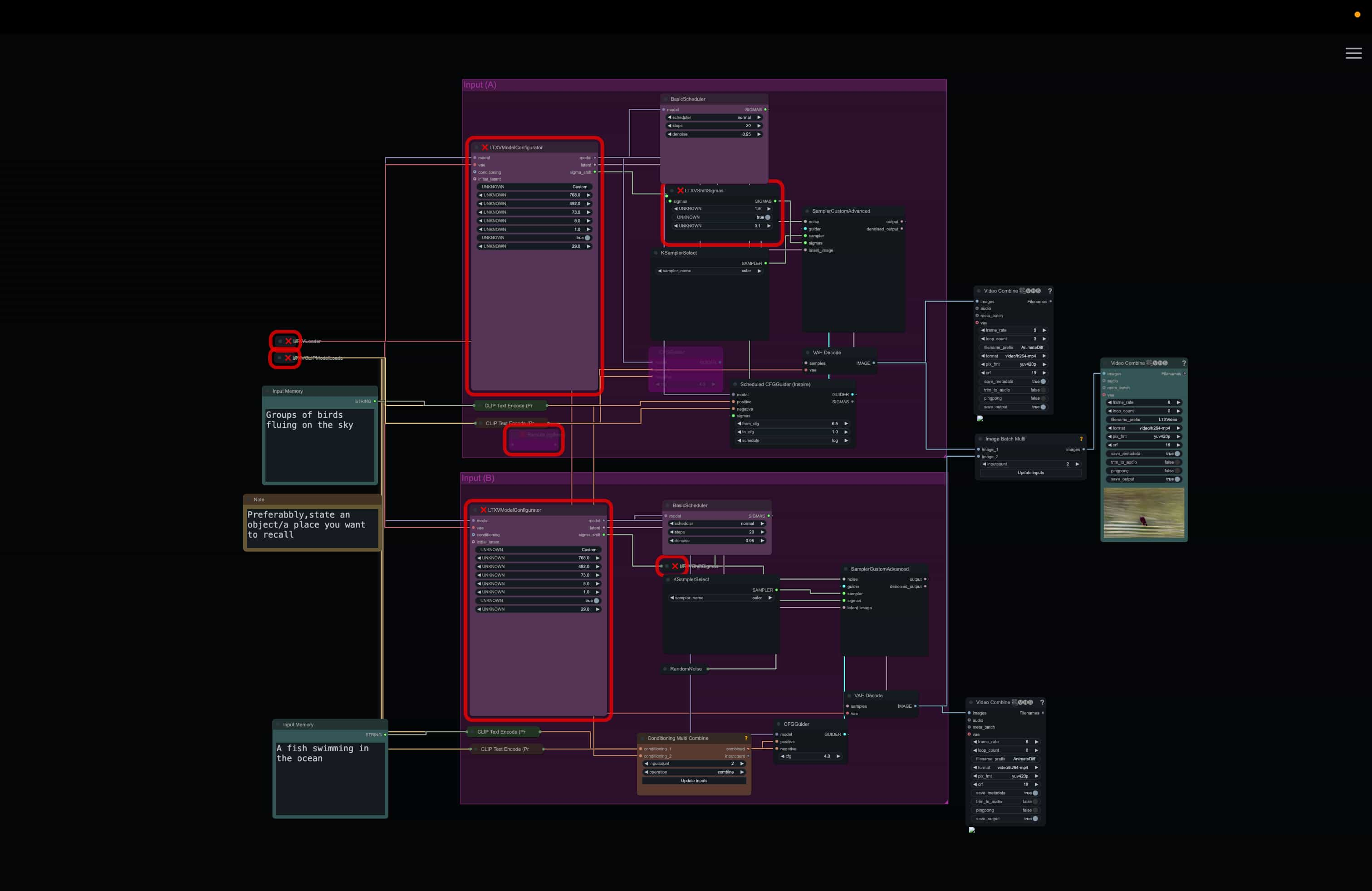

Thus, is there a way for me to create my own generative workflow that can act as a memory visualizer? Not a singular process, where each person writes a prompt and the machine forgets the previous one, but a collective process, where the machine merges each prompt as more inputs are written.

Our memory doesn’t live alone; it grows collectively as time passes.

This also reflects the idea that our memory doesn’t exist as a singular past event; it is an active process that influences our perception, existing in both past and present and blending as time passes (Bergson).

Source: DALL·E: Creating images from text

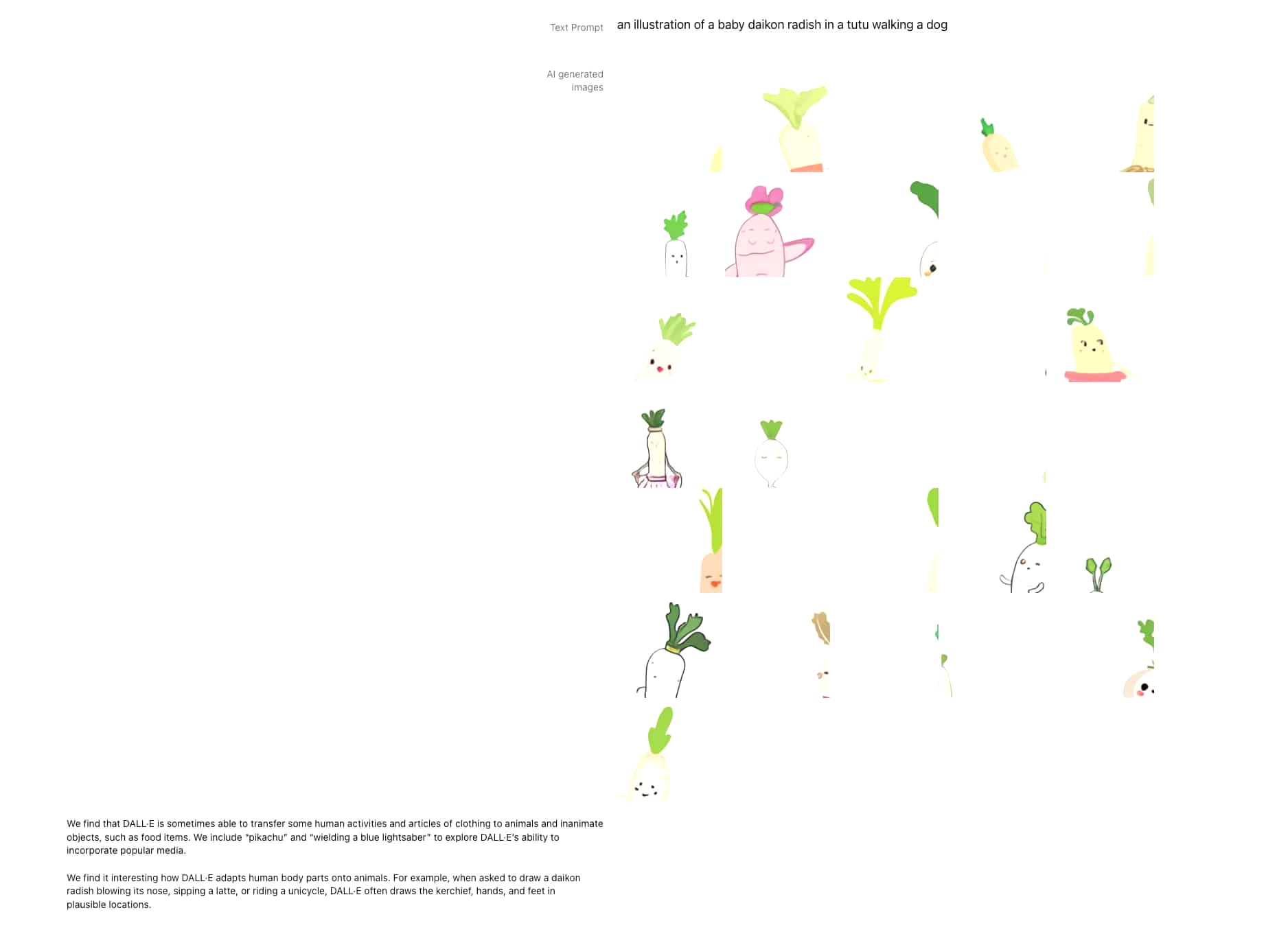

1. A deer eating grass

2. A monkey jumping on the grass

3. A flower blooming

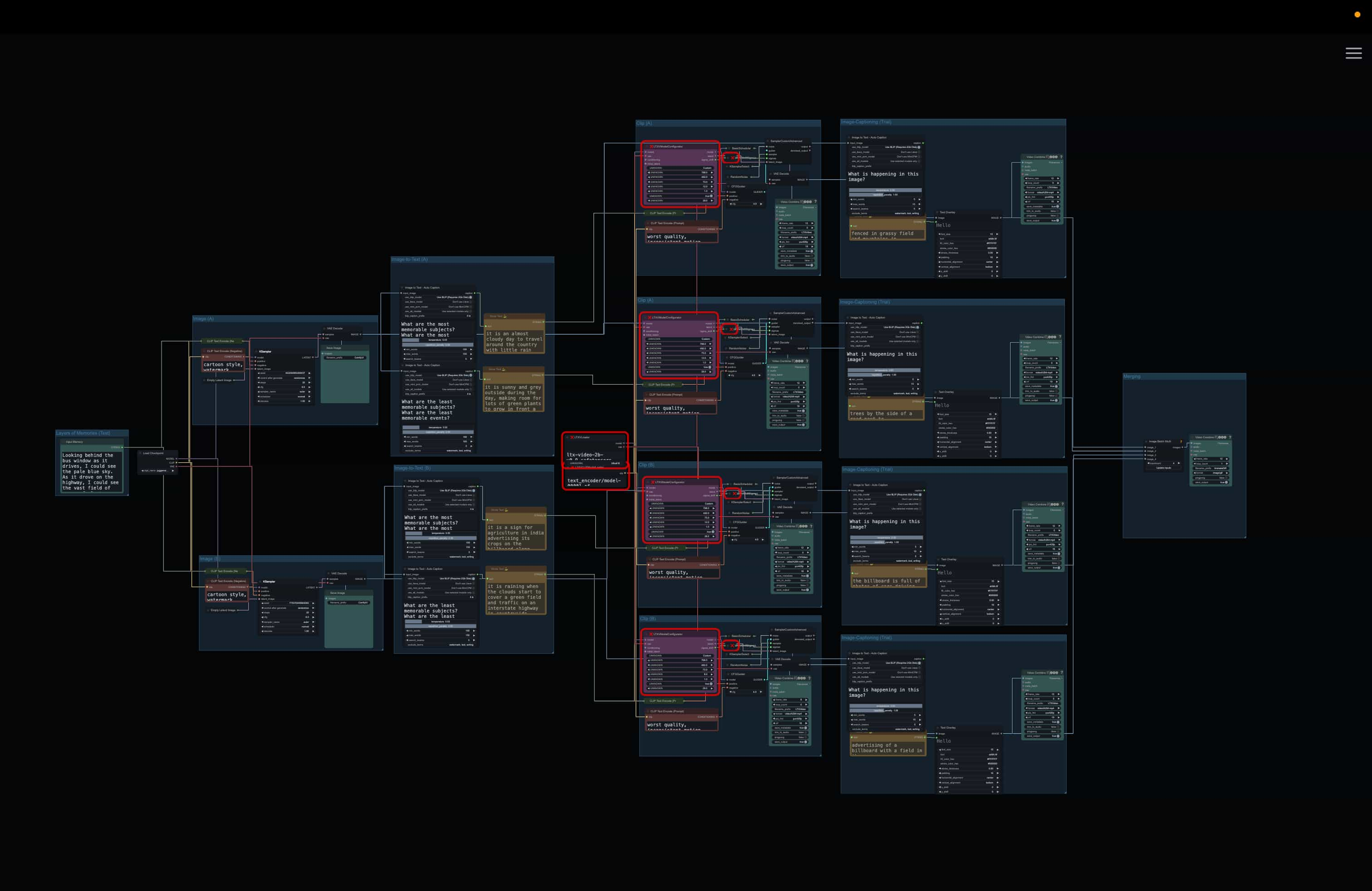

Comparisons of the two nodes

Conditioning Node

To create a generative workflow that merges every prompt that is passed, I had to understand how the prompt flows in and out. In the current workflow, the prompt is a single input connected to the positive CLIP conditioning. First, I had to find a node that combines conditionings.

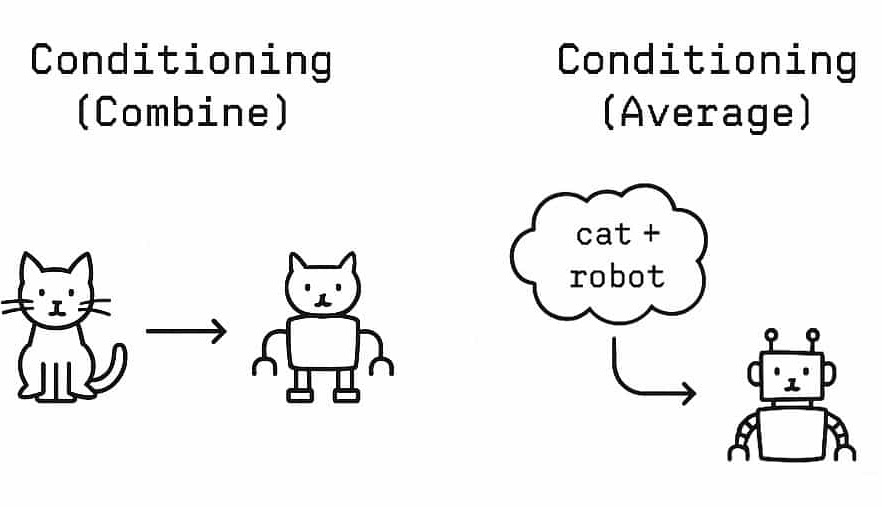

There are two conditioning nodes that can merge two conditionings: Conditioning (Combine) and Conditioning (Average). What are the differences?

Conditioning (Combine) works by letting the diffusion model generate noise predictions for each conditioning separately, then averaging those predictions to guide the final output. In contrast, Conditioning (Average) blends the text embeddings themselves before the generation even begins, creating a unified conditioning input for the model.

Imagine you're painting with help from two artists: one loves cats, the other adores robots. With Conditioning (Combine), each artist paints their own version of the scene, and then you merge the two paintings into one.

With Conditioning (Average), the artists first brainstorm together, blend their ideas into a single concept, and then create one cohesive painting from that shared vision.
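The difference between the two approaches can be sketched with a toy example. This is not ComfyUI's code: `toy_model` is a made-up nonlinear function standing in for the diffusion model, the two-number "embeddings" for cats and robots are hypothetical, and `strength` is an illustrative blend weight. It only shows that averaging the predictions and averaging the inputs give different results once the model is nonlinear.

```python
import math

def toy_model(embedding):
    """Stand-in for the diffusion model: a nonlinear map from a
    conditioning vector to a 'noise prediction'. Purely illustrative."""
    return [math.tanh(3.0 * x) for x in embedding]

def mean(vectors):
    """Element-wise mean of equal-length vectors."""
    return [sum(values) / len(values) for values in zip(*vectors)]

def combine_style(emb_a, emb_b):
    """Combine-like: predict for each conditioning separately,
    then average the two predictions."""
    return mean([toy_model(emb_a), toy_model(emb_b)])

def average_style(emb_a, emb_b, strength=0.5):
    """Average-like: blend the embeddings first, then predict once."""
    blended = [strength * a + (1.0 - strength) * b for a, b in zip(emb_a, emb_b)]
    return toy_model(blended)

cats = [1.0, 0.0]    # hypothetical embedding for "cats"
robots = [0.0, 1.0]  # hypothetical embedding for "robots"

combined = combine_style(cats, robots)
averaged = average_style(cats, robots)
print("combine-like:", combined)
print("average-like:", averaged)
```

In the painter analogy, `combine_style` is the two artists painting separately before the canvases are merged, while `average_style` is the shared brainstorm that happens before any paint touches the canvas.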

Illustration generated by ChatGPT

Workflow Process

Memory Bank

The next step is to create the memory bank, which stores every prompt that has been passed to the CLIP conditioning. To do this, I found a node that acts as a prompt stash: it takes the prompts as text strings and stores them as plain text in text files.
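The idea behind the prompt stash can be sketched in a few lines of plain Python. This is not the node's actual implementation; the function names `stash_prompt` and `recall_prompts` and the file name `memory_bank.txt` are hypothetical, and the sketch assumes one prompt per line.

```python
import tempfile
from pathlib import Path

def stash_prompt(prompt, bank_path):
    """Append one prompt to the memory bank as a single line of plain text."""
    cleaned = " ".join(prompt.split())  # collapse line breaks and extra spaces
    if not cleaned:
        return  # ignore empty input
    with Path(bank_path).open("a", encoding="utf-8") as bank:
        bank.write(cleaned + "\n")

def recall_prompts(bank_path):
    """Return every stored prompt, oldest first."""
    path = Path(bank_path)
    if not path.exists():
        return []
    return [line for line in path.read_text(encoding="utf-8").splitlines() if line]

# Demo in a temporary folder so no file is left behind.
with tempfile.TemporaryDirectory() as folder:
    bank_file = Path(folder) / "memory_bank.txt"
    for text in ["A deer eating grass", "A monkey jumping on the grass", "A flower blooming"]:
        stash_prompt(text, bank_file)
    remembered = recall_prompts(bank_file)

print(remembered)
```

Because the file is only ever appended to, the order of the lines records the order in which people wrote their prompts, which is what lets later prompts be merged with earlier ones.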

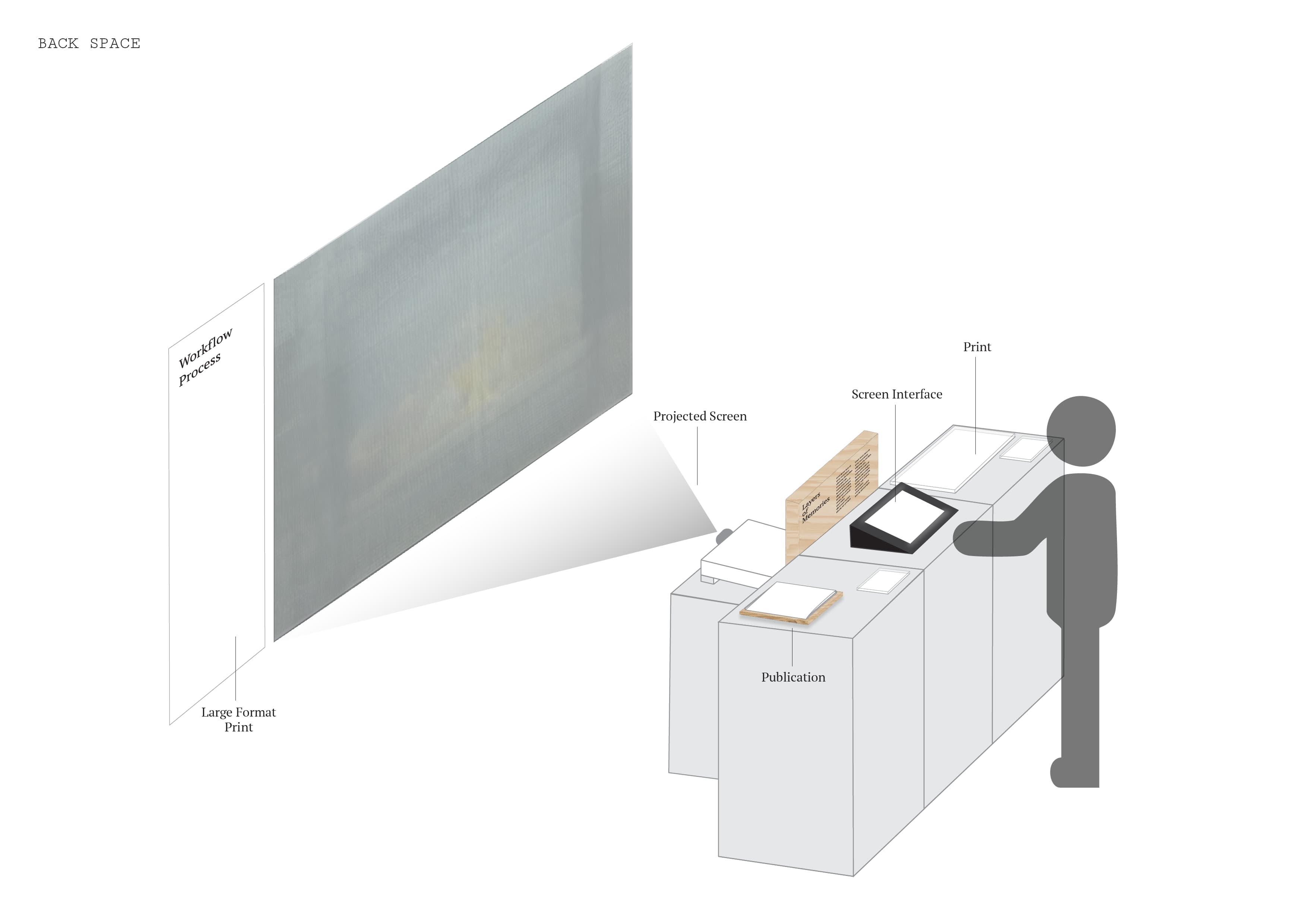

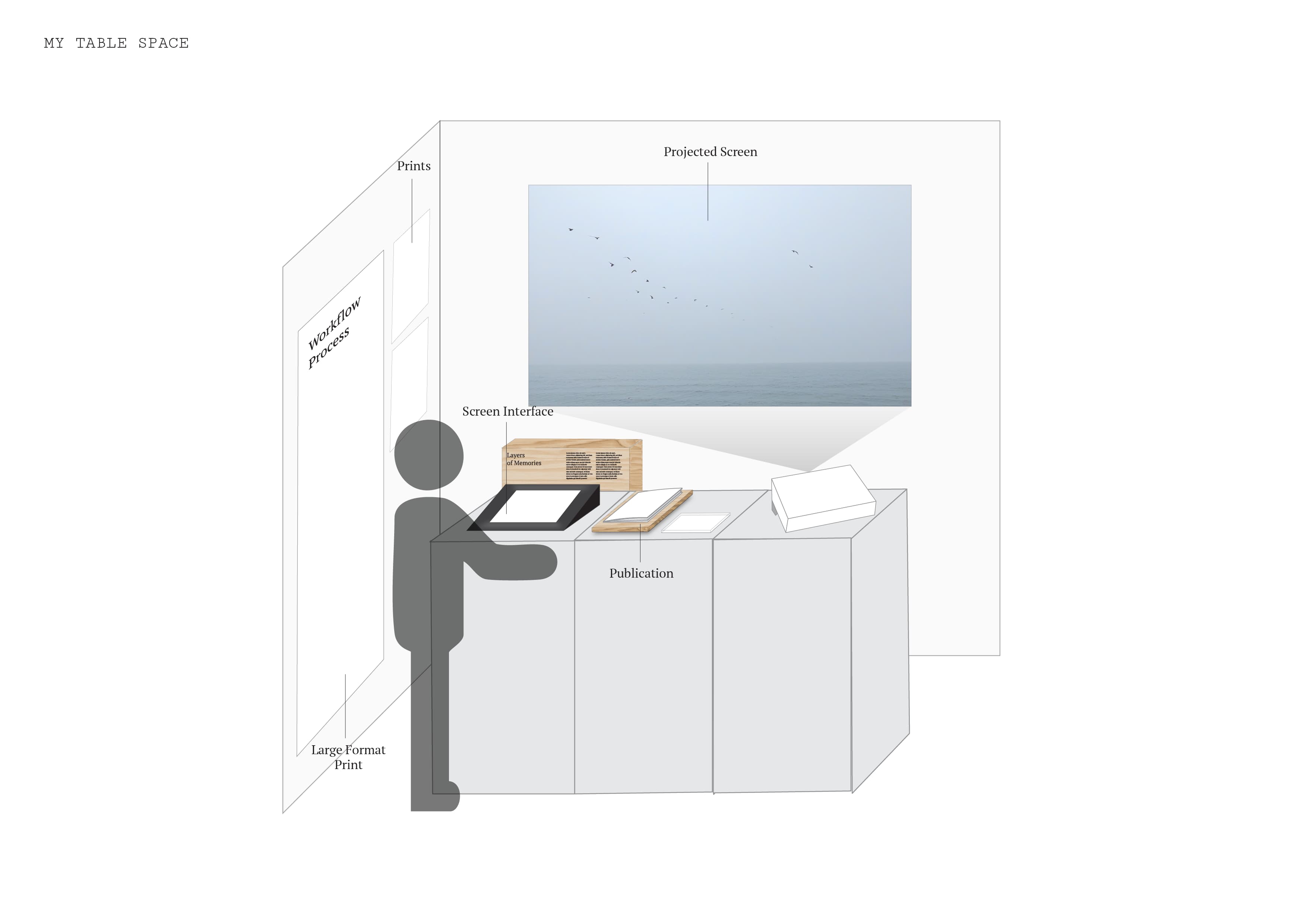

Table Set-Up

I have sorted out plans for my table setup during Open Studio. It’s an ideal sketch of how I am going to present each individual work; however, it could change depending on the space.

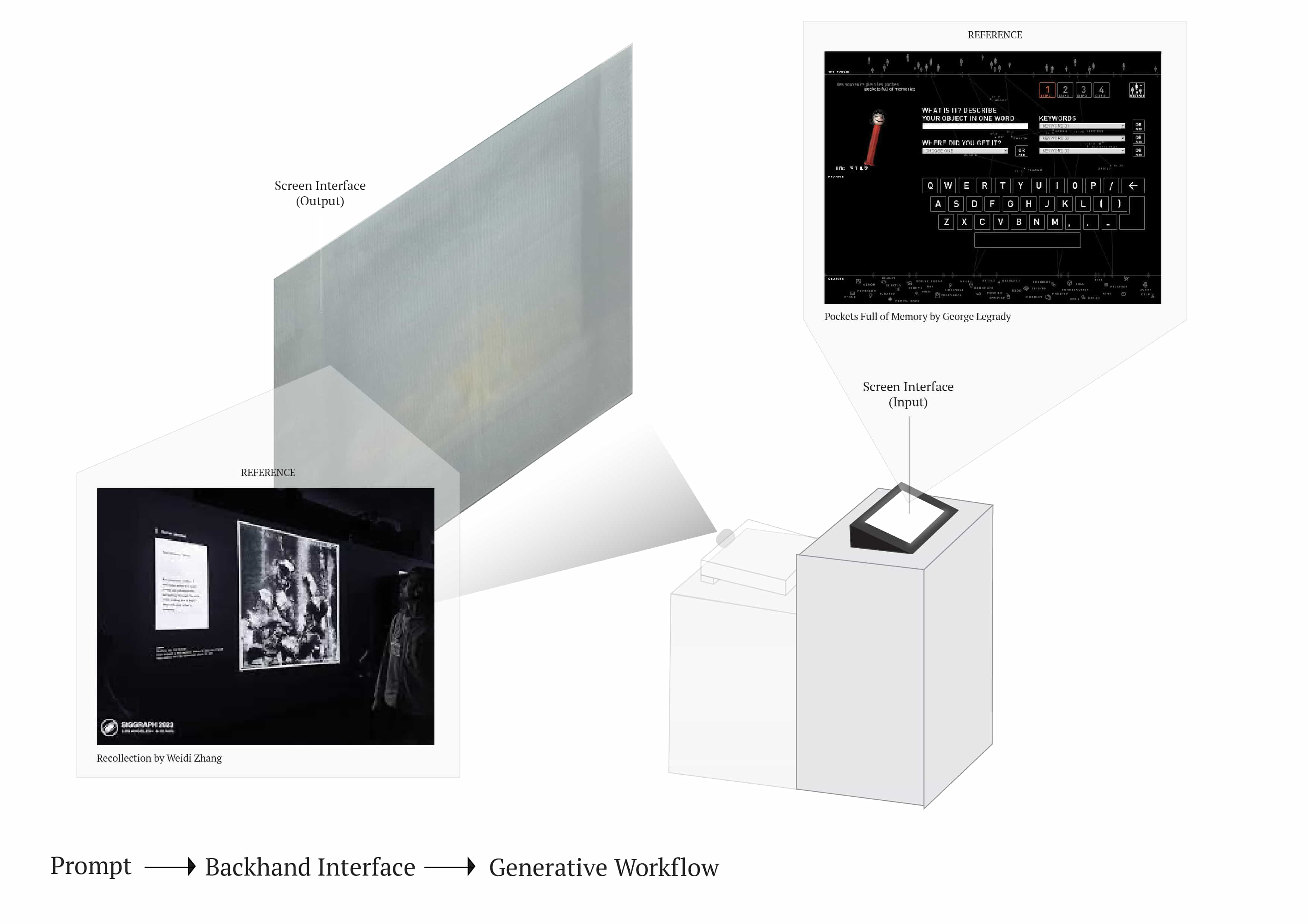

Main Installation

The main installation would be my challenge: making the generative workflow that I built interactive, meaning people can input their own words and the installation will transform them into visuals in real time.

This will require me to create a front-end and back-end system for the real-time experience to work. I will need to create an interface for people to input their prompts; the front end will pass them to the back end, which will pass them on to the workflow that I made. Although this seems complicated, it could be possible through API calls, as ComfyUI has many external plug-ins.
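A minimal sketch of the back-end step, assuming ComfyUI is running locally and accepts a workflow exported in API format as a JSON POST to its `/prompt` endpoint. The address `127.0.0.1:8188`, the node id `"6"`, and the tiny `example_workflow` fragment are assumptions for illustration; a real export of my workflow would have its own node ids and many more nodes.

```python
import copy
import json
import urllib.request

COMFYUI_URL = "http://127.0.0.1:8188/prompt"  # assumed local ComfyUI address

def build_payload(workflow, text_node_id, visitor_prompt):
    """Copy an API-format workflow and place the visitor's words in its text node."""
    patched = copy.deepcopy(workflow)  # leave the original template untouched
    patched[text_node_id]["inputs"]["text"] = visitor_prompt
    return {"prompt": patched}

def queue_prompt(payload, url=COMFYUI_URL):
    """POST the payload to ComfyUI and return its JSON reply.
    Only works while a ComfyUI server is running at `url`."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())

# Hypothetical fragment of a workflow exported in API format.
example_workflow = {
    "6": {"class_type": "CLIPTextEncode", "inputs": {"text": "", "clip": ["4", 1]}},
}

payload = build_payload(example_workflow, "6", "A flower blooming")
print(json.dumps(payload, indent=2))
# queue_prompt(payload)  # uncomment once ComfyUI is running
```

The front-end interface would only need to collect the visitor's text and hand it to `build_payload`; everything specific to ComfyUI stays in the back end.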

Prints (Posters, Publication)

I could print out the workflows in large format and lay them on the wall beside the projected screen. I haven’t thought of the layout yet, but one thing I need to keep in mind is to make it less technical and add more visuals of the process.

Human-Machine Memory

For my Semester 1 works, I aim to reuse the website that I made as part of the documentation for the Open Studio. I can just use another iPad as the screen device for people to interact with.

Final Reflection

The table setup that I have planned will depend on the space that I have. I need to think about how to use materials such as wood and acrylic to elevate my setup, perhaps creating a frame for my iPad and using transparent acrylic to cover my prints.

I also need to think about how I am going to build the visual identity of the project, such as which typeface and colors to use. These little details may affect how viewers see my setup, and a consistent typeface and visual structure will make it easier for me to design my printouts.